Arize integration

Effective August 1, 2024, the Anyscale Endpoints API will be available exclusively through the fully Hosted Anyscale Platform. Multi-tenant access to LLMs will be removed.

With the Hosted Anyscale Platform, you can access the latest GPUs billed by the second, and deploy models on your own dedicated instances. Enjoy full customization to build your end-to-end applications with Anyscale. Get started today.

Arize is a machine learning observability platform that helps unpack the proverbial AI black box.

Integrate Anyscale Endpoints with Arize to improve observability of your LLM applications.

To integrate with Arize, first create an Arize callback handler. Get your ARIZE_SPACE_KEY and ARIZE_API_KEY from the Arize console, then run the following code to create the callback handler.

```python
# Install Arize dependencies (run in a shell; quote the version spec so ">" isn't treated as a redirect):
# pip3 install arize
# pip3 install "langchain>=0.0.336"

from langchain.callbacks.arize_callback import ArizeCallbackHandler

# Replace these placeholders with the keys from your Arize console
ARIZE_SPACE_KEY = "YOUR_ARIZE_SPACE_KEY"
ARIZE_API_KEY = "YOUR_ARIZE_API_KEY"

if ARIZE_SPACE_KEY == "YOUR_ARIZE_SPACE_KEY" or ARIZE_API_KEY == "YOUR_ARIZE_API_KEY":
    raise ValueError("❌ CHANGE SPACE AND API KEYS")

# Define callback handler for Arize
arize_callback = ArizeCallbackHandler(
    model_id="anyscale-arize-demo",
    model_version="1.0",
    SPACE_KEY=ARIZE_SPACE_KEY,
    API_KEY=ARIZE_API_KEY,
)
```
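Hardcoding keys in source code makes them easy to leak. As a minimal sketch, assuming you export your credentials as environment variables named `ARIZE_SPACE_KEY` and `ARIZE_API_KEY` (names chosen here for illustration), a hypothetical helper `load_arize_keys` could read them and fail fast, much like the placeholder check above:

```python
import os


def load_arize_keys(env=os.environ):
    """Hypothetical helper: read Arize credentials from environment variables.

    Assumes the keys are exported as ARIZE_SPACE_KEY and ARIZE_API_KEY.
    Raises ValueError if either key is missing or still a placeholder.
    """
    space_key = env.get("ARIZE_SPACE_KEY", "")
    api_key = env.get("ARIZE_API_KEY", "")
    placeholders = {"", "YOUR_ARIZE_SPACE_KEY", "YOUR_ARIZE_API_KEY"}
    if space_key in placeholders or api_key in placeholders:
        raise ValueError("Set ARIZE_SPACE_KEY and ARIZE_API_KEY to your Arize credentials")
    return space_key, api_key
```

You would then call `ARIZE_SPACE_KEY, ARIZE_API_KEY = load_arize_keys()` and pass the values to `ArizeCallbackHandler` as before. Accepting a mapping argument keeps the helper easy to test without touching the real environment.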

This example uses LangChain to create a `ChatAnyscale` chat model for every model that Anyscale Endpoints supports.

```python
from langchain.schema import SystemMessage, HumanMessage
from langchain.chat_models import ChatAnyscale

ANYSCALE_ENDPOINT_TOKEN = "YOUR_ANYSCALE_TOKEN"

messages = [
    SystemMessage(
        content="You are a helpful AI that shares everything you know."
    ),
    HumanMessage(
        content="How to evaluate the value of a NFL team"
    ),
]

# One ChatAnyscale client per model available to this token
chats = {
    model: ChatAnyscale(
        anyscale_api_key=ANYSCALE_ENDPOINT_TOKEN, model_name=model, temperature=0.7
    )
    for model in ChatAnyscale.get_available_models(anyscale_api_key=ANYSCALE_ENDPOINT_TOKEN)
}
```
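Anyscale Endpoints serves an OpenAI-compatible chat API, so each chat call sends the two messages above as role/content pairs. The following is a rough, stdlib-only sketch of such a request body; `build_chat_payload` is a hypothetical helper for illustration, and the exact fields LangChain sends may differ (it may include additional parameters).

```python
import json


def build_chat_payload(model, system_prompt, user_prompt, temperature=0.7):
    """Hypothetical helper: assemble an OpenAI-style chat request body.

    A SystemMessage corresponds to role "system" and a HumanMessage to role "user".
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": temperature,
    }


payload = build_chat_payload(
    "YOUR_MODEL_NAME",  # placeholder; use a name returned by get_available_models
    "You are a helpful AI that shares everything you know.",
    "How to evaluate the value of a NFL team",
)
print(json.dumps(payload, indent=2))
```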

Next, invoke each chat model with the prompt messages and pass the Arize callback handler in `callbacks`.

```python
for model, chat in chats.items():
    # Pass the Arize handler so this call's prompt and response are logged to Arize
    response = chat.predict_messages(messages, callbacks=[arize_callback])
    print(model, "\n", response.content)
```
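Passing `callbacks=[arize_callback]` works because LangChain notifies each handler when a model call starts and ends, and the Arize handler relies on those hooks to capture prompts and responses. The sketch below illustrates that observer pattern in plain Python. It is a simplified stand-in, not LangChain's `BaseCallbackHandler` interface: `RecordingHandler`, `fake_model`, and `run_with_callbacks` are hypothetical, and the real hook methods take more arguments.

```python
class RecordingHandler:
    """Hypothetical handler that records each prompt/response pair it is notified of."""

    def __init__(self):
        self.records = []
        self._pending = None

    def on_llm_start(self, prompt):
        # Called before the model runs: remember the prompt.
        self._pending = prompt

    def on_llm_end(self, response):
        # Called after the model returns: pair the response with its prompt.
        self.records.append((self._pending, response))
        self._pending = None


def fake_model(prompt):
    # Stand-in for a real chat model call.
    return f"echo: {prompt}"


def run_with_callbacks(model_fn, prompt, callbacks):
    # Notify every handler before and after the model call.
    for handler in callbacks:
        handler.on_llm_start(prompt)
    response = model_fn(prompt)
    for handler in callbacks:
        handler.on_llm_end(response)
    return response


handler = RecordingHandler()
run_with_callbacks(fake_model, "How to evaluate the value of a NFL team", [handler])
print(handler.records)
```

Because the handler is passed per call, the same handler instance can observe every model in the loop above without any change to the models themselves.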

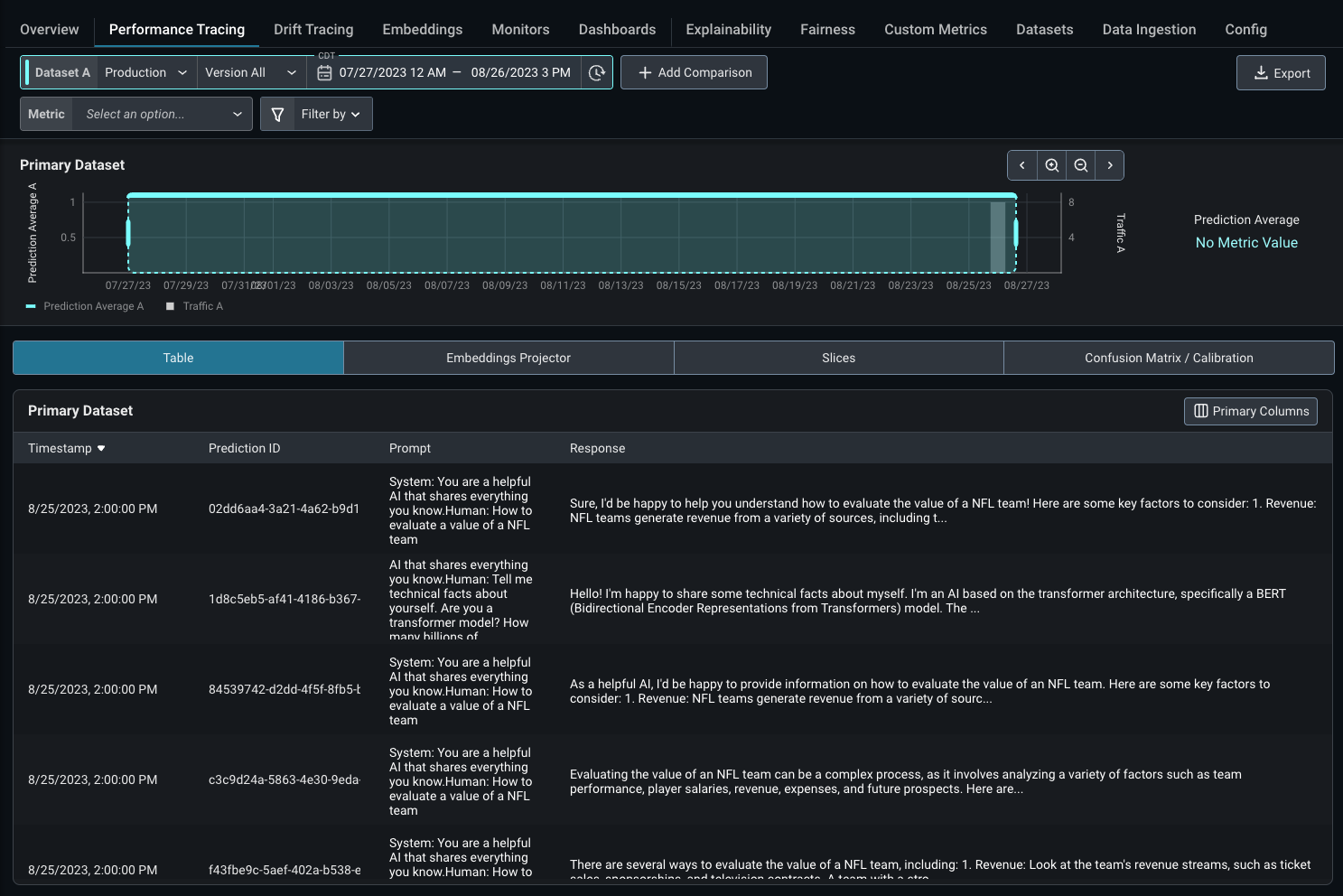

Here is a screenshot of the Arize dashboard with prompts and responses.

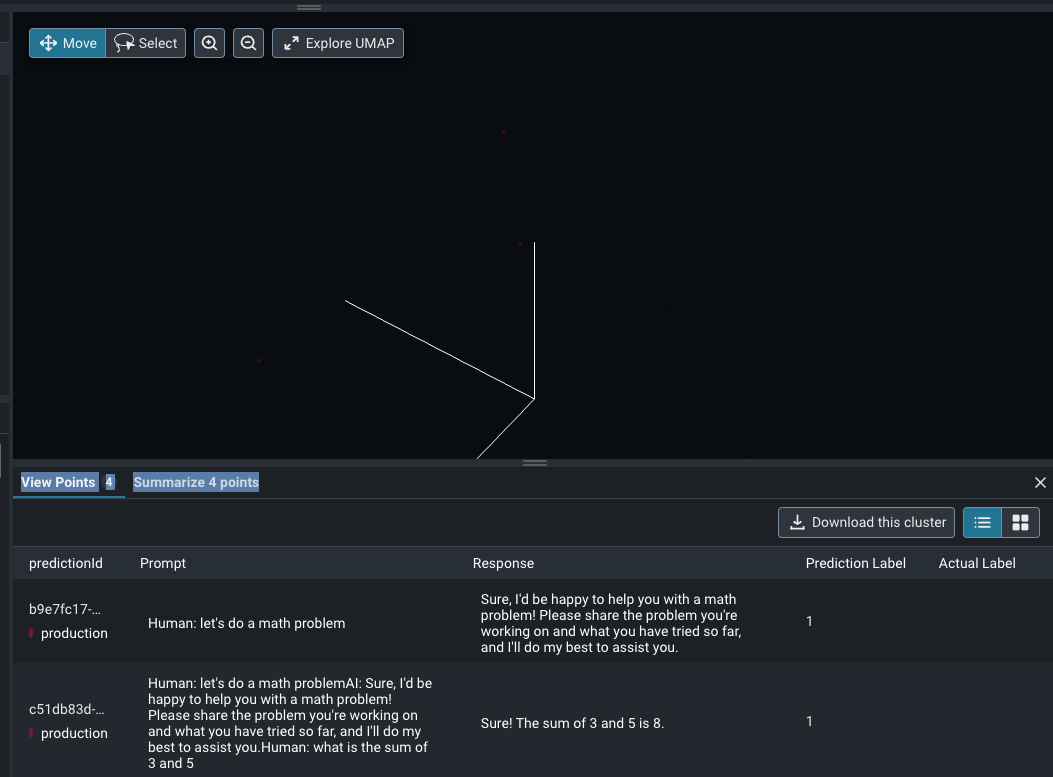

You can also visualize the embedding results with UMAP.