Weights and Biases integration

Effective August 1, 2024, the Anyscale Endpoints API will be available exclusively through the fully Hosted Anyscale Platform. Multi-tenant access to LLMs will be removed.

With the Hosted Anyscale Platform, you can access the latest GPUs billed by the second, and deploy models on your own dedicated instances. Enjoy full customization to build your end-to-end applications with Anyscale. Get started today.

Weights and Biases (WandB) is a platform that allows users to track and visualize various aspects of their model training and inference performance in real time.

You can use WandB Weave to monitor LLM performance.

First, install the weave, openai, and tiktoken libraries.

pip install weave openai tiktoken

Log in to WandB and initialize the monitor process.

import wandb
from weave.monitoring import init_monitor

# Log in to WandB
wandb.login()

ANYSCALE_ENDPOINT_TOKEN = "YOUR_ANYSCALE_TOKEN"

# Settings
WB_ENTITY = "YOUR_WB_ENTITY"  # placeholder: replace with your WandB entity
WB_PROJECT = "anyscale-endpoint"
STREAM_NAME = "llama2_logs"

# Initialize the monitor for the stream at entity/project/stream
m = init_monitor(f"{WB_ENTITY}/{WB_PROJECT}/{STREAM_NAME}")
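For anything beyond a quick test, you may prefer not to hard-code the token and entity. The following is a minimal sketch that reads them from environment variables and assembles the `entity/project/stream` path passed to `init_monitor`. The environment variable names and the `build_stream_path` helper are assumptions made for illustration; they are not part of Weave or Anyscale.

```python
import os


def build_stream_path(entity: str, project: str, stream: str) -> str:
    """Join the three parts into the 'entity/project/stream' form.

    Hypothetical helper: it only checks that each part is non-empty and
    contains no '/', so the joined path has exactly three segments.
    """
    parts = {"entity": entity, "project": project, "stream": stream}
    for name, value in parts.items():
        if not value or "/" in value:
            raise ValueError(f"invalid {name}: {value!r}")
    return "/".join(parts.values())


# Assumed variable names; fall back to the placeholders used on this page.
ANYSCALE_ENDPOINT_TOKEN = os.environ.get("ANYSCALE_ENDPOINT_TOKEN", "YOUR_ANYSCALE_TOKEN")
WB_ENTITY = os.environ.get("WB_ENTITY", "YOUR_WB_ENTITY")

stream_path = build_stream_path(WB_ENTITY, "anyscale-endpoint", "llama2_logs")
print(stream_path)
```

The resulting `stream_path` string can be passed to `init_monitor` in place of the f-string.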

Fill the stream table with sample logs. After running the code snippet below, view the logs at http://weave.wandb.ai.

from weave.monitoring import openai

response = openai.ChatCompletion.create(
    api_base="https://api.endpoints.anyscale.com/v1",
    api_key=ANYSCALE_ENDPOINT_TOKEN,
    model="meta-llama/Llama-2-70b-chat-hf",
    messages=[
        {"role": "user",
         "content": "What is the meaning of life, the universe, and everything?"},
    ])

# Print the text of the first returned choice
print(response['choices'][0]['message']['content'])
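The `print` call indexes into the response like a nested dictionary. The sketch below applies the same access pattern to a hand-written placeholder that follows the OpenAI chat completion layout (`choices`, `message`, `usage`). The values are invented for illustration and are not real model output, and `summarize` is a hypothetical helper.

```python
# Placeholder shaped like a chat completion response; all values are invented.
sample_response = {
    "model": "meta-llama/Llama-2-70b-chat-hf",
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "<model reply goes here>"},
            "finish_reason": "stop",
        }
    ],
    "usage": {"prompt_tokens": 0, "completion_tokens": 0, "total_tokens": 0},
}


def summarize(response: dict) -> dict:
    """Pull the reply text and token counts out of a response-like dict."""
    usage = response.get("usage", {})
    return {
        "content": response["choices"][0]["message"]["content"],
        "prompt_tokens": usage.get("prompt_tokens"),
        "completion_tokens": usage.get("completion_tokens"),
        "total_tokens": usage.get("total_tokens"),
    }


print(summarize(sample_response))
```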

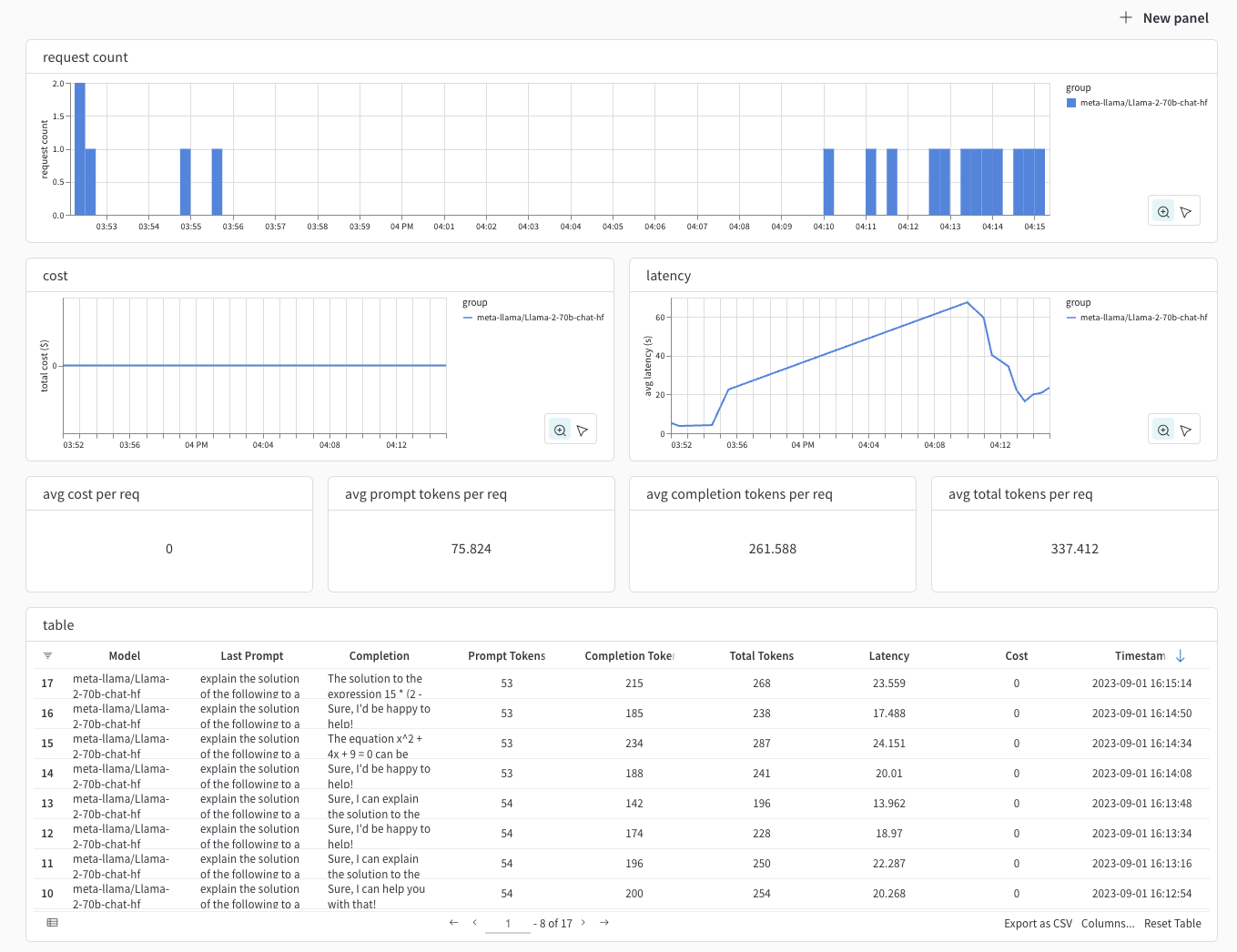

The following is a screenshot of the Weave dashboard. You can see that WandB records all prompts and reponses together with number of tokens, latency, timestamp, etc.
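To make the latency and timestamp fields concrete, here is a rough sketch of how such values could be captured around any call. This is not how Weave is implemented; `timed_call`, `fake_completion`, and the record field names are hypothetical.

```python
import time
from datetime import datetime, timezone
from typing import Any, Callable


def timed_call(fn: Callable[..., Any], *args: Any, **kwargs: Any) -> dict:
    """Run fn and return its result with a start timestamp and latency."""
    started_at = datetime.now(timezone.utc)
    t0 = time.perf_counter()
    result = fn(*args, **kwargs)
    latency_s = time.perf_counter() - t0
    return {
        "timestamp": started_at.isoformat(),
        "latency_s": latency_s,
        "result": result,
    }


# Stand-in for a model call so the sketch runs without network access.
def fake_completion(prompt: str) -> str:
    time.sleep(0.01)
    return prompt.upper()


record = timed_call(fake_completion, "hello")
print(record["timestamp"], f"{record['latency_s']:.3f}s", record["result"])
```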

Weave can also factor out parameters of interest and track them as attributes on the logged record. In the following example, WandB tracks the "system prompt" separately from the "prompt template" and the "equation" parameter, and the full structured response from the ChatCompletion call is printed.

system_prompt = "you always write in bullet points"
prompt_template = 'solve the following equation step by step: {equation}'
params = {'equation': '4 * (3 - 1)'}

response = openai.ChatCompletion.create(
    api_base="https://api.endpoints.anyscale.com/v1",
    api_key=ANYSCALE_ENDPOINT_TOKEN,
    model="meta-llama/Llama-2-70b-chat-hf",
    messages=[
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": prompt_template.format(**params)},
    ],
    # You can add additional attributes to the logged record.
    # See the monitor_api notebook for more examples.
    monitor_attributes={
        'system_prompt': system_prompt,
        'prompt_template': prompt_template,
        'params': params
    })

# Print the full structured response
print(response)
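The relationship between the template, its parameters, and the logged attributes can be sketched without calling the API. `build_request` below is a hypothetical helper. It only assembles the `messages` list and the `monitor_attributes` dictionary in the shape used in the example.

```python
def build_request(system_prompt: str, prompt_template: str, params: dict) -> dict:
    """Render the template and keep the unrendered pieces as attributes."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": prompt_template.format(**params)},
        ],
        "monitor_attributes": {
            "system_prompt": system_prompt,
            "prompt_template": prompt_template,
            "params": params,
        },
    }


request = build_request(
    "you always write in bullet points",
    "solve the following equation step by step: {equation}",
    {"equation": "4 * (3 - 1)"},
)
print(request["messages"][1]["content"])
# → solve the following equation step by step: 4 * (3 - 1)
```

The returned dictionary could then be unpacked into the `create` call alongside `api_base`, `api_key`, and `model`, which keeps the rendered message and the logged attributes in sync.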