Global Resource Min/Max

This version of the Anyscale docs is deprecated. Go to the latest version for up-to-date information.

This feature will be deprecated soon. Please use the min_resources and max_resources fields in the compute config to define global minimum and maximum resource values instead.

The global resource min/max feature of the Smart Instance Manager lets you define minimum and maximum values for a resource across all worker node types. It supports scenarios such as:

- An Anyscale Cloud has access to two different types of GPUs. A given workload can use either GPU.

- A particular workload needs 1,500 CPUs and needs to run on spot instances to save costs. Several instance types can support this workload, but spot instance quotas and availability limit how many nodes of each instance type can be launched.

- A training job requires at least 10 GPUs and, at most, 20 GPUs. It needs to use a specific type of GPU, but it can run on nodes with varying numbers of GPUs.

The feature is enabled for Ray 2.7+ and nightly images built after Aug 22, 2023.

Usage

There are two primary ways to work with this feature:

- A compute config can specify the number of CPUs or GPUs required. The Anyscale Smart Instance Manager then determines how many nodes to launch from one or more worker node types.
- A compute config can specify a custom resource on the worker nodes. The Smart Instance Manager uses that custom resource's value to determine how many nodes are needed to satisfy the minimum and maximum.
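In the examples on this page, the minimum and maximum are passed as cluster-wide keys named as-feature-cross-group-min-count-<resource> and as-feature-cross-group-max-count-<resource>. On AWS they are EC2 instance tags, and on GCP they are instance labels. The Python sketch below builds both Advanced Configurations payloads from a single mapping. The helper names are hypothetical and are not part of the Anyscale SDK. The key pattern is inferred from the examples on this page.

```python
import json

# Hypothetical helpers (not an Anyscale API). The key pattern is inferred from
# the JSON/YAML examples on this page.


def cross_group_keys(limits):
    """Turn {"CPU": (150, 500)} into cross-group min/max keys with string values.

    Pass None for a bound you do not want to set. Values are strings because
    both EC2 tag values and GCE label values are strings.
    """
    keys = {}
    for resource, (minimum, maximum) in limits.items():
        if minimum is not None:
            keys[f"as-feature-cross-group-min-count-{resource}"] = str(minimum)
        if maximum is not None:
            keys[f"as-feature-cross-group-max-count-{resource}"] = str(maximum)
    return keys


def aws_advanced_config(limits):
    """Cluster-wide AWS Advanced Configurations JSON: keys become EC2 instance tags."""
    tags = [{"Key": key, "Value": value} for key, value in cross_group_keys(limits).items()]
    return {"TagSpecifications": [{"Tags": tags, "ResourceType": "instance"}]}


def gcp_advanced_config(limits):
    """Cluster-wide GCP Advanced Configurations JSON: keys become instance labels."""
    return {"instance_properties": {"labels": cross_group_keys(limits)}}


if __name__ == "__main__":
    print(json.dumps(aws_advanced_config({"CPU": (150, 500)}), indent=2))
    print(json.dumps(gcp_advanced_config({"GPU": (10, 20)}), indent=2))
```

With a single mapping, the AWS and GCP variants cannot drift apart. Using None for an unset bound covers the custom-resource example, which sets only a minimum.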

Examples

Multiple GPU types

Requirements: The workload can run on multiple GPU types. To maximize cost savings, it should use spot instances. The Smart Instance Manager should start one worker: either a T4 instance or an A10 (AWS) / K80 (GCP) instance, as a spot instance.

AWS (EC2)

- Create a new Compute Config in the Anyscale Console.

- Add two workers. One should be a

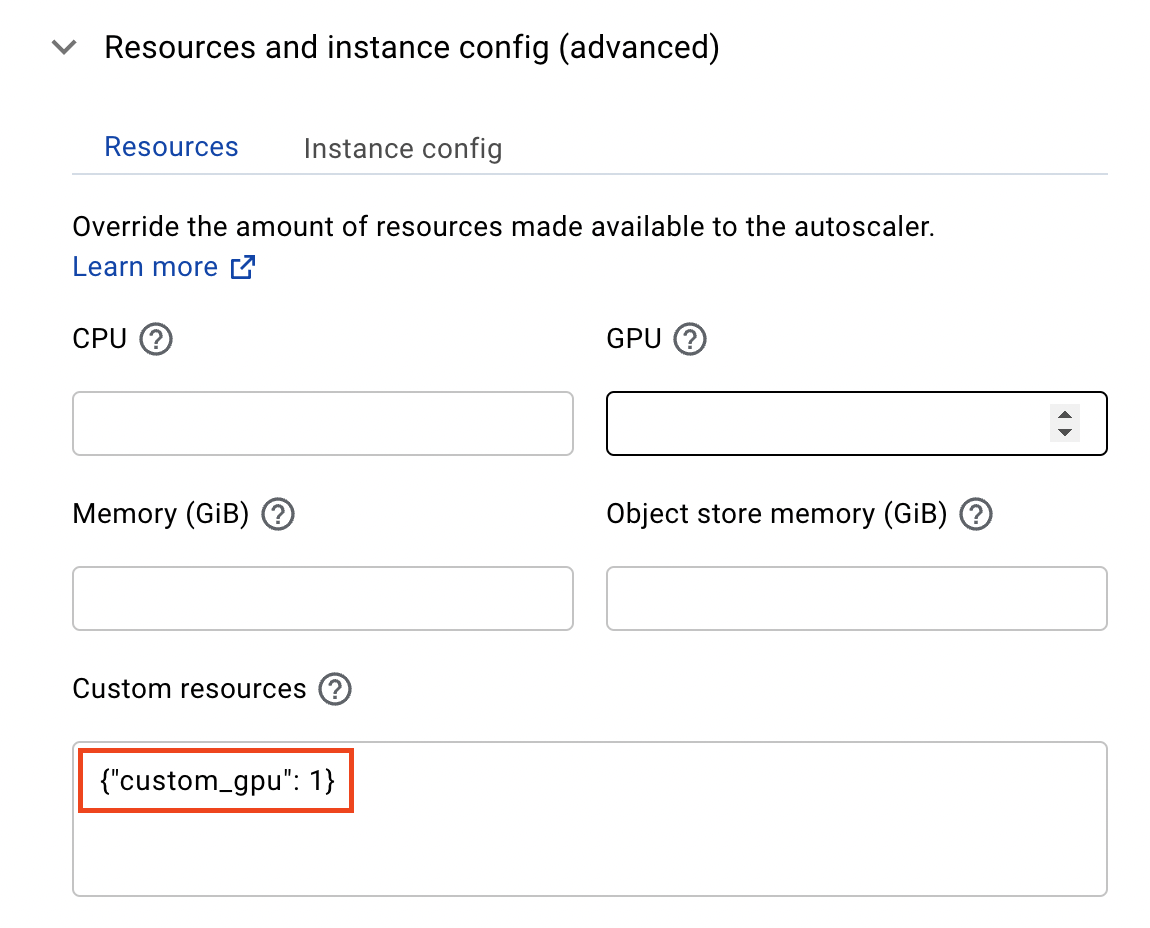

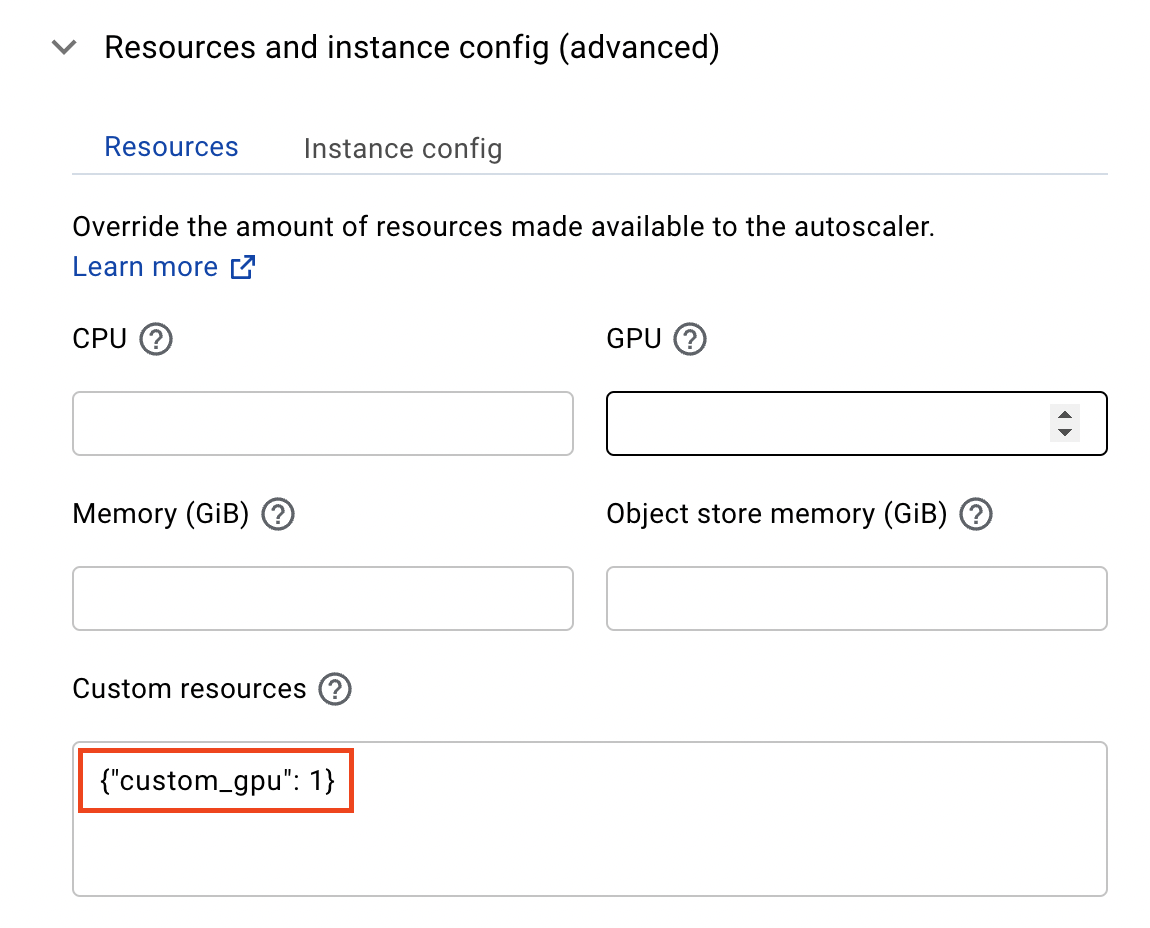

g4dn.4xlarge(T4 GPU) worker instance type, and the other should be ag5.4xlarge(A10 GPU) worker instance type. - In the Resources and instance config (advanced) section for both, set a custom resource for each Worker node type to be:

{"custom_resource": 1}

- In the cluster-wide Advanced Configurations input box, specify the following JSON key value:

```json
{
  "TagSpecifications": [
    {
      "Tags": [
        {
          "Key": "as-feature-cross-group-min-count-custom_resource",
          "Value": "1"
        }
      ],
      "ResourceType": "instance"
    }
  ]
}
```

- When the cluster is launched, the Autoscaler will ensure that a minimum of custom_resource = 1 is satisfied by attempting to launch the node groups that have custom_resource specified. In this case, one g4dn.4xlarge or one g5.4xlarge node will be launched.

GCP (GCE)

- Create a new Compute Config in the Anyscale Console.

- Add two workers: an n1-standard-16-nvidia-t4-16gb-1 (T4 GPU) worker instance type and an n1-standard-8-nvidia-k80-12gb-1 (K80 GPU) worker instance type.
- In the Resources and instance config (advanced) section of each worker node type, set the following custom resource:

```json
{"custom_resource": 1}
```

- In the cluster-wide Advanced Configurations input box, specify the following JSON key value:

```json
{
  "instance_properties": {
    "labels": {
      "as-feature-cross-group-min-count-custom_resource": "1"
    }
  }
}
```

- When the cluster is launched, the Autoscaler will ensure that a minimum of custom_resource = 1 is satisfied by attempting to launch the node groups that have custom_resource specified. In this case, one T4 node or one K80 node will be launched.
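For a custom resource, the Autoscaler can only launch worker node types that declare that resource to satisfy the cross-group minimum. The name after the as-feature-cross-group-min-count- prefix must therefore match the resource name in each worker node type exactly. The sketch below is a hypothetical pre-flight check, not an Anyscale tool. It lists which worker node types a key can draw on, using plain dictionaries shaped like the worker_node_types entries in the YAML examples.

```python
# Hypothetical pre-flight check (not an Anyscale tool): which worker node types
# declare the custom resource named in a cross-group tag/label key?
PREFIXES = (
    "as-feature-cross-group-min-count-",
    "as-feature-cross-group-max-count-",
)


def resource_name(key):
    """Strip the cross-group prefix from a tag or label key."""
    for prefix in PREFIXES:
        if key.startswith(prefix):
            return key[len(prefix):]
    raise ValueError(f"not a cross-group key: {key}")


def contributing_groups(worker_node_types, key):
    """Names of worker node types whose custom_resources include the key's resource."""
    resource = resource_name(key)
    return [
        group["name"]
        for group in worker_node_types
        if resource in group.get("resources", {}).get("custom_resources", {})
    ]


workers = [
    {"name": "gpu_worker_1", "resources": {"custom_resources": {"custom_resource": 1}}},
    {"name": "gpu_worker_2", "resources": {"custom_resources": {"custom_resource": 1}}},
]
print(contributing_groups(workers, "as-feature-cross-group-min-count-custom_resource"))
# ['gpu_worker_1', 'gpu_worker_2']
print(contributing_groups(workers, "as-feature-cross-group-min-count-custom_gpu"))
# [] -> no worker node type declares custom_gpu, so this minimum cannot be met
```

An empty list means the key names a resource that no worker node type declares. A mismatched name such as custom_gpu is exactly this kind of typo.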

If you prefer to use the Anyscale CLI or SDK, use the following YAML for Example #1.

AWS (EC2)

```yaml
cloud: anyscale_aws_cloud_useast1 # You may specify `cloud_id` instead
allowed_azs:
  - us-east-2a
  - us-east-2b
  - us-east-2c
head_node_type:
  name: head_node_type
  instance_type: m5.2xlarge
worker_node_types:
  - name: gpu_worker_1
    instance_type: g4dn.8xlarge
    resources:
      custom_resources:
        custom_resource: 1
    min_workers: 0
    max_workers: 10
    use_spot: true
  - name: gpu_worker_2
    instance_type: g5.8xlarge
    resources:
      custom_resources:
        custom_resource: 1
    min_workers: 0
    max_workers: 10
    use_spot: true
aws_advanced_configurations_json:
  TagSpecifications:
    - ResourceType: instance
      Tags:
        - Key: as-feature-cross-group-min-count-custom_resource
          Value: "1"
```

GCP (GCE)

```yaml
cloud: anyscale_gcp_cloud_uscentral1 # You may specify `cloud_id` instead
allowed_azs:
  - us-central1-a
  - us-central1-b
  - us-central1-c
  - us-central1-f
head_node_type:
  name: head_node_type
  instance_type: n2-standard-32
worker_node_types:
  - name: gpu_worker_1
    instance_type: n1-standard-16-nvidia-t4-16gb-1
    resources:
      custom_resources:
        custom_resource: 1
    min_workers: 0
    max_workers: 10
    use_spot: true
  - name: gpu_worker_2
    instance_type: n1-standard-8-nvidia-k80-12gb-1
    resources:
      custom_resources:
        custom_resource: 1
    min_workers: 0
    max_workers: 10
    use_spot: true
gcp_advanced_configurations_json:
  instance_properties:
    labels:
      as-feature-cross-group-min-count-custom_resource: "1"
```

Multiple CPUs

Requirements: The workload needs a minimum of 150 CPUs and a maximum of 500 CPUs. The workers should be spot instances for cost savings. Because quotas and spot availability are low, the Smart Instance Manager can choose from multiple instance types to obtain the required CPUs.

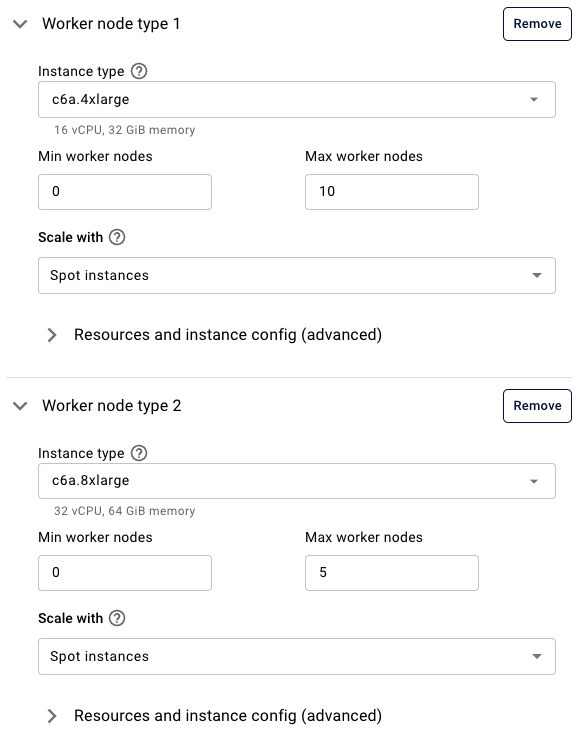

- AWS (EC2)

- GCP (GCE)

- Create three worker types with varying numbers of CPUs. Set each worker type's minimum worker node count to 0 and its maximum high enough that the worker types together can satisfy 150 CPUs. This example uses c6a instance types.

- In the cluster-wide Advanced Configurations input box, specify the following JSON key value:

```json
{
  "TagSpecifications": [
    {
      "Tags": [
        {
          "Key": "as-feature-cross-group-min-count-CPU",
          "Value": "150"
        },
        {
          "Key": "as-feature-cross-group-max-count-CPU",
          "Value": "500"
        }
      ],
      "ResourceType": "instance"
    }
  ]
}
```

- When the cluster is launched, the Autoscaler will identify which spot instances are available to satisfy the minimum of 150 CPUs.

- As additional scaling events are triggered, the Autoscaler will continue to add worker nodes from the available worker types until the maximum of 500 CPUs is reached.

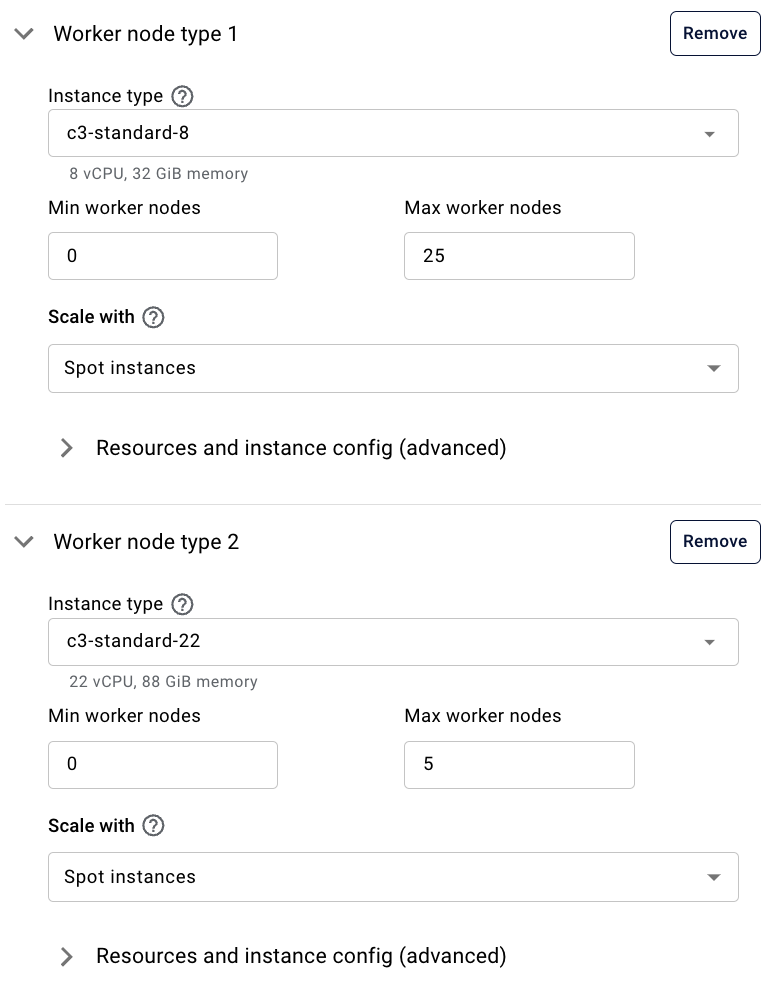

- Create three worker types with varying numbers of CPUs. Set each worker type's minimum worker node count to 0 and its maximum high enough that the worker types together can satisfy 150 CPUs. This example uses c3 instance types.

- In the cluster-wide Advanced Configurations input box, specify the following JSON key value:

```json
{
  "instance_properties": {
    "labels": {
      "as-feature-cross-group-min-count-CPU": "150",
      "as-feature-cross-group-max-count-CPU": "500"
    }
  }
}
```

- When the cluster is launched, the Autoscaler will identify which spot instances are available to satisfy the minimum of 150 CPUs.

- As additional scaling events are triggered, the Autoscaler will continue to add worker nodes from the available worker types until the maximum of 500 CPUs is reached.
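This page does not spell out how the Autoscaler chooses among the available spot instance types. As a rough illustration only, the toy model below fills the 150-CPU minimum from whatever spot capacity is available. It tries larger instance types first and never exceeds the 500-CPU maximum. The largest-first ordering, the availability numbers, and the function name are assumptions for this sketch, not the Smart Instance Manager's actual algorithm. The vCPU counts are the published sizes of the c6a types, and the model assumes Ray exposes one CPU resource per vCPU.

```python
# Published vCPU counts for the c6a sizes in this example; the model assumes
# Ray exposes one CPU resource per vCPU.
VCPUS = {"c6a.4xlarge": 16, "c6a.8xlarge": 32, "c6a.12xlarge": 48}


def fill_to_minimum(available, vcpus, global_min, global_max):
    """Toy model (hypothetical): add spot nodes until the global CPU minimum is met.

    available maps instance type -> nodes obtainable right now (spot
    availability, already capped by max_workers). Larger types are tried
    first; a node is skipped if it would push the total past global_max.
    If availability runs out first, the returned total is below global_min.
    Returns (nodes launched per instance type, total CPUs).
    """
    plan, total = {}, 0
    for itype in sorted(available, key=lambda t: vcpus[t], reverse=True):
        for _ in range(available[itype]):
            if total >= global_min:
                return plan, total
            if total + vcpus[itype] > global_max:
                break  # this type would overshoot the maximum; try a smaller one
            plan[itype] = plan.get(itype, 0) + 1
            total += vcpus[itype]
    return plan, total


if __name__ == "__main__":
    # Hypothetical spot availability: only one 12xlarge and two 8xlarge nodes.
    available = {"c6a.4xlarge": 10, "c6a.8xlarge": 2, "c6a.12xlarge": 1}
    plan, total = fill_to_minimum(available, VCPUS, global_min=150, global_max=500)
    print(plan, total)  # {'c6a.12xlarge': 1, 'c6a.8xlarge': 2, 'c6a.4xlarge': 3} 160
```

Scarce large instances are backfilled with smaller ones, which is why the example offers several worker types. Any other ordering (cheapest first, most available first) would fit the same interface.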

If you prefer to use the Anyscale CLI or SDK, use the following YAML for Example #2.

AWS (EC2)

```yaml
cloud: anyscale_aws_cloud_uswest2 # You may specify `cloud_id` instead
allowed_azs:
  - us-west-2a
  - us-west-2b
  - us-west-2c
head_node_type:
  name: head_node_type
  instance_type: m5.2xlarge
worker_node_types:
  - name: cpu_worker_1
    instance_type: c6a.4xlarge
    min_workers: 0
    max_workers: 10
    use_spot: true
  - name: cpu_worker_2
    instance_type: c6a.8xlarge
    min_workers: 0
    max_workers: 5
    use_spot: true
  - name: cpu_worker_3
    instance_type: c6a.12xlarge
    min_workers: 0
    max_workers: 5
    use_spot: true
aws_advanced_configurations_json:
  TagSpecifications:
    - ResourceType: instance
      Tags:
        - Key: as-feature-cross-group-min-count-CPU
          Value: "150"
        - Key: as-feature-cross-group-max-count-CPU
          Value: "500"
```

GCP (GCE)

```yaml
cloud: anyscale-gcp-uscentral1 # You may specify `cloud_id` instead
region: us-central1
allowed_azs:
  - us-central1-a
  - us-central1-b
  - us-central1-c
head_node_type:
  name: head_node_type
  instance_type: n2-standard-16
worker_node_types:
  - name: cpu_worker_type_1
    instance_type: c3-standard-8
    min_workers: 0
    max_workers: 25
    use_spot: true
  - name: cpu_worker_type_2
    instance_type: c3-standard-22
    min_workers: 0
    max_workers: 5
    use_spot: true
  - name: cpu_worker_type_3
    instance_type: c3-standard-44
    min_workers: 0
    max_workers: 5
    use_spot: true
gcp_advanced_configurations_json:
  instance_properties:
    labels:
      as-feature-cross-group-min-count-CPU: "150"
      as-feature-cross-group-max-count-CPU: "500"
```
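A quick sanity check on the Example #2 YAML: with every worker type at its max_workers, the worker types together must offer at least the global minimum. Otherwise the minimum can never be met, because per worker group maximums take priority over the global minimum (see the FAQ). The sketch below does that arithmetic using the published vCPU counts of each instance type. It assumes Ray exposes one CPU resource per vCPU. The function name is hypothetical.

```python
# Published vCPU counts for the instance types in the Example #2 YAML; the
# sketch assumes Ray exposes one CPU resource per vCPU.
VCPUS = {
    "c6a.4xlarge": 16, "c6a.8xlarge": 32, "c6a.12xlarge": 48,
    "c3-standard-8": 8, "c3-standard-22": 22, "c3-standard-44": 44,
}


def max_cpu_capacity(worker_types):
    """CPUs available when every (instance_type, max_workers) pair is at its max."""
    return sum(VCPUS[itype] * max_workers for itype, max_workers in worker_types)


# (instance_type, max_workers) pairs copied from the YAML above.
aws = [("c6a.4xlarge", 10), ("c6a.8xlarge", 5), ("c6a.12xlarge", 5)]
gcp = [("c3-standard-8", 25), ("c3-standard-22", 5), ("c3-standard-44", 5)]

for cloud, workers in (("AWS", aws), ("GCP", gcp)):
    capacity = max_cpu_capacity(workers)
    print(f"{cloud}: {capacity} CPUs, min 150 reachable: {capacity >= 150}, "
          f"max 500 reachable: {capacity >= 500}")
```

Both configurations exceed the 500-CPU maximum (560 CPUs on AWS, 530 on GCP). This means the global maximum, not the per worker group maximums, is what caps scale-up.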

Multiple GPUs

Requirements: The workload needs a minimum of 10 GPUs and a maximum of 20 GPUs. The workers should be spot instances. Because quotas and spot availability are low, the Smart Instance Manager can choose from multiple instance types to obtain the required GPUs.

- AWS (EC2)

- GCP (GCE)

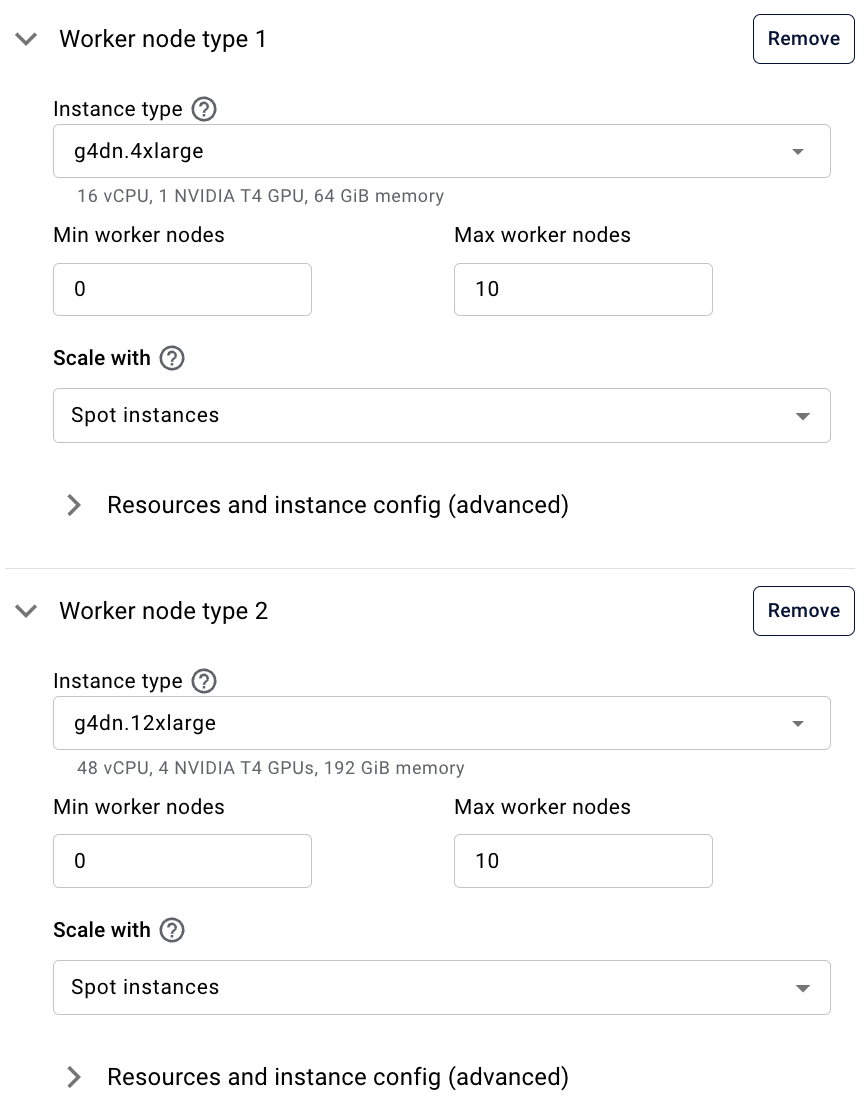

- Create two worker types: g4dn.4xlarge (1 GPU) and g4dn.12xlarge (4 GPUs).

- In the cluster-wide Advanced Configurations input box, specify the following JSON key value:

```json
{
  "TagSpecifications": [
    {
      "Tags": [
        {
          "Key": "as-feature-cross-group-min-count-GPU",
          "Value": "10"
        },
        {
          "Key": "as-feature-cross-group-max-count-GPU",
          "Value": "20"
        }
      ],
      "ResourceType": "instance"
    }
  ]
}
```

- When the cluster is launched, the Autoscaler will identify which spot instances are available to satisfy the minimum of 10 GPUs. This can be a combination of g4dn.4xlarge (1 GPU each) and g4dn.12xlarge (4 GPUs each) nodes.

- As additional scaling events are triggered, the Autoscaler will continue to add worker nodes from the available worker types until the maximum of 20 GPUs is reached.

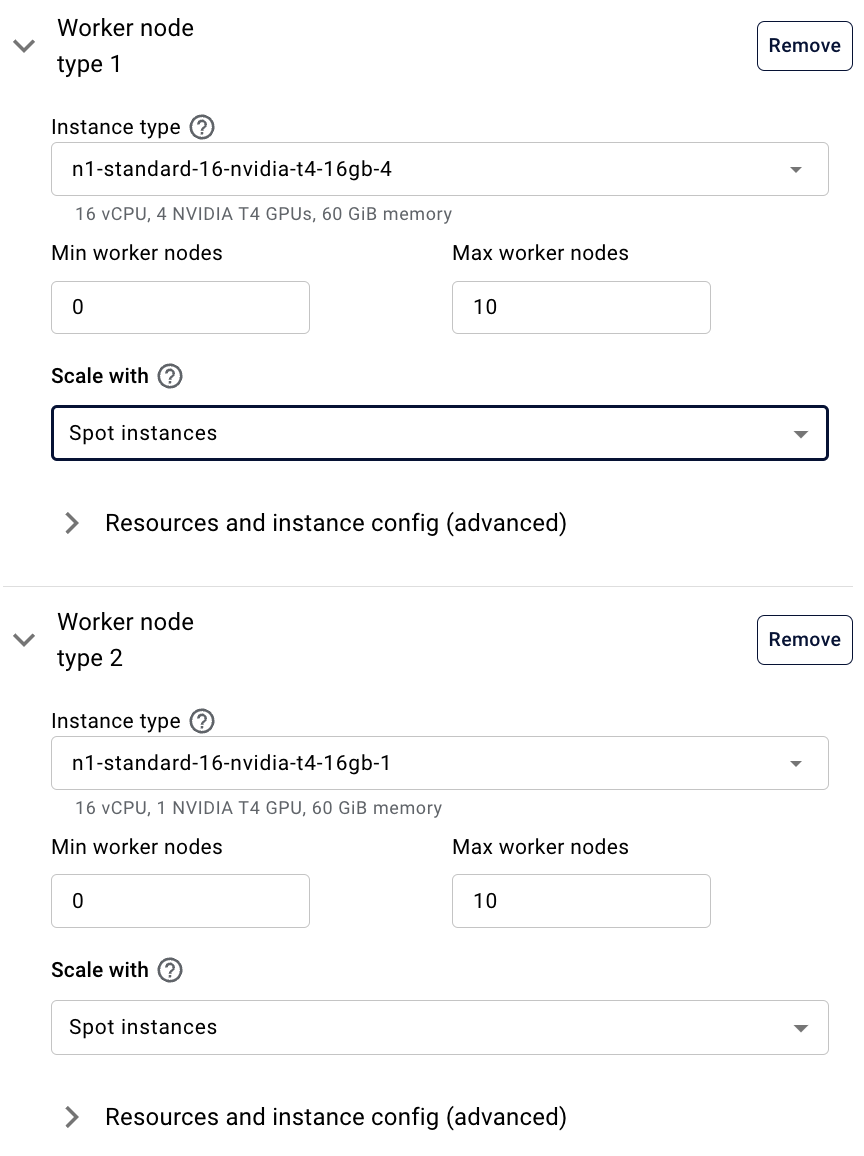

- Create two worker types: n1-standard-16-nvidia-t4-16gb-1 (1 GPU) and n1-standard-16-nvidia-t4-16gb-4 (4 GPUs).

- In the cluster-wide Advanced Configurations input box, specify the following JSON key value:

```json
{
  "instance_properties": {
    "labels": {
      "as-feature-cross-group-min-count-GPU": "10",
      "as-feature-cross-group-max-count-GPU": "20"
    }
  }
}
```

- When the cluster is launched, the Autoscaler will identify which spot instances are available to satisfy the minimum of 10 GPUs. This can be a combination of n1-standard-16-nvidia-t4-16gb-1 (1 GPU each) and n1-standard-16-nvidia-t4-16gb-4 (4 GPUs each) nodes.

- As additional scaling events are triggered, the Autoscaler will continue to add worker nodes from the available worker types until the maximum of 20 GPUs is reached.
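The GPU bounds can be checked with simple arithmetic. The sketch below enumerates every mix of the two AWS worker types (1 GPU and 4 GPUs per node, up to 10 nodes each, as in the Example #3 YAML) whose total falls between 10 and 20 GPUs. It only shows which mixes satisfy the bounds. Which mix is actually launched depends on spot availability and is not modeled here. The function name is hypothetical.

```python
from itertools import product


def gpu_mixes(gpus_per_node, max_workers, low, high):
    """List (node counts, total GPUs) for every mix whose total is in [low, high].

    gpus_per_node and max_workers are dicts keyed by instance type.
    """
    names = sorted(gpus_per_node)
    mixes = []
    # Try every node count from 0 to max_workers for each instance type.
    for counts in product(*(range(max_workers[n] + 1) for n in names)):
        total = sum(c * gpus_per_node[n] for c, n in zip(counts, names))
        if low <= total <= high:
            mixes.append((dict(zip(names, counts)), total))
    return mixes


gpus = {"g4dn.4xlarge": 1, "g4dn.12xlarge": 4}
limits = {"g4dn.4xlarge": 10, "g4dn.12xlarge": 10}
mixes = gpu_mixes(gpus, limits, low=10, high=20)
print(len(mixes))  # 30
# Mix with the fewest nodes that satisfies the bounds.
print(min(mixes, key=lambda m: sum(m[0].values())))
# ({'g4dn.12xlarge': 3, 'g4dn.4xlarge': 0}, 12)
```

With a 4-GPU node type available, the minimum can be met with as few as three nodes. The 1-GPU type lets the total land on values that multiples of 4 cannot reach, such as exactly 10.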

If you prefer to use the Anyscale CLI or SDK, use the following YAML for Example #3.

AWS (EC2)

```yaml
cloud: anyscale_v2_aws_useast2 # You may specify `cloud_id` instead
allowed_azs:
  - us-east-2a
  - us-east-2b
  - us-east-2c
head_node_type:
  name: head_node_type
  instance_type: m5.2xlarge
worker_node_types:
  - name: gpu_worker_1
    instance_type: g4dn.12xlarge
    min_workers: 0
    max_workers: 10
    use_spot: true
  - name: gpu_worker_2
    instance_type: g4dn.4xlarge
    min_workers: 0
    max_workers: 10
    use_spot: true
aws_advanced_configurations_json:
  TagSpecifications:
    - ResourceType: instance
      Tags:
        - Key: as-feature-cross-group-min-count-GPU
          Value: "10"
        - Key: as-feature-cross-group-max-count-GPU
          Value: "20"
```

GCP (GCE)

```yaml
cloud: anyscale_v2_gcp_uswest1 # You may specify `cloud_id` instead
allowed_azs:
  - us-west1-a
  - us-west1-b
  - us-west1-c
head_node_type:
  name: head_node_type
  instance_type: n2-standard-32
worker_node_types:
  - name: gpu_worker_1
    instance_type: n1-standard-16-nvidia-t4-16gb-1
    min_workers: 0
    max_workers: 10
    use_spot: true
  - name: gpu_worker_2
    instance_type: n1-standard-16-nvidia-t4-16gb-4
    min_workers: 0
    max_workers: 10
    use_spot: true
gcp_advanced_configurations_json:
  instance_properties:
    labels:
      as-feature-cross-group-min-count-GPU: "10"
      as-feature-cross-group-max-count-GPU: "20"
```

FAQ

Question: Both per worker group min/max and global resource min/max can be set. How are they prioritized?

- The per worker group minimum has the highest priority; it is always satisfied.

- The global resource minimum has lower priority than the per worker group maximum and the global resource maximum.

- If any minimum is not satisfied, the cluster is not healthy.
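The three rules above can be expressed as a toy model, measured in CPUs. The dataclass and function names are hypothetical. The model ignores spot availability and launch order and only shows how the priorities interact. In the usage example, the per worker group maximums cap the reachable total at 96 CPUs, which is below a 150-CPU global minimum. The maximums win, and the cluster is reported as unhealthy.

```python
from dataclasses import dataclass


@dataclass
class WorkerGroup:
    """Hypothetical stand-in for one worker node type."""
    name: str
    cpus_per_node: int
    min_workers: int
    max_workers: int


def target_cpus(groups, global_min, global_max):
    """CPU total this toy autoscaler aims for under the FAQ priority rules."""
    # Rule 1: per worker group minimums have the highest priority and are always launched.
    floor = sum(g.cpus_per_node * g.min_workers for g in groups)
    # Rule 2: the global minimum yields to per-group maximums and the global maximum.
    reachable = sum(g.cpus_per_node * g.max_workers for g in groups)
    capped_global_min = min(global_min, global_max, reachable)
    return max(floor, capped_global_min)


def is_healthy(groups, running, global_min):
    """Rule 3: healthy only if every per-group minimum and the global minimum are met."""
    per_group_ok = all(running.get(g.name, 0) >= g.min_workers for g in groups)
    total = sum(g.cpus_per_node * running.get(g.name, 0) for g in groups)
    return per_group_ok and total >= global_min


if __name__ == "__main__":
    groups = [
        WorkerGroup("cpu_worker_1", cpus_per_node=16, min_workers=0, max_workers=2),
        WorkerGroup("cpu_worker_2", cpus_per_node=32, min_workers=1, max_workers=2),
    ]
    print(target_cpus(groups, global_min=150, global_max=500))  # 96
    running = {"cpu_worker_1": 2, "cpu_worker_2": 2}  # every group at its maximum
    print(is_healthy(groups, running, global_min=150))  # False
```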