Function calling

Anyscale Private Endpoints supports function calling, which augments large language models (LLMs) with external tools. OpenAI introduced function calling to support the creation of agents, and Anyscale brings this capability to open source models so that these LLMs can call APIs for search, data querying, messaging, and more. Users define functions and their parameters; the model then selects the relevant function and generates the arguments for the call within these constraints, and your application executes it.

This example (view on GitHub) shows how to configure, deploy, and query a function calling model equipped with some common tools.

Step 0: Install dependencies

pip install openai==1.3.2

Step 1: Configure the deployment

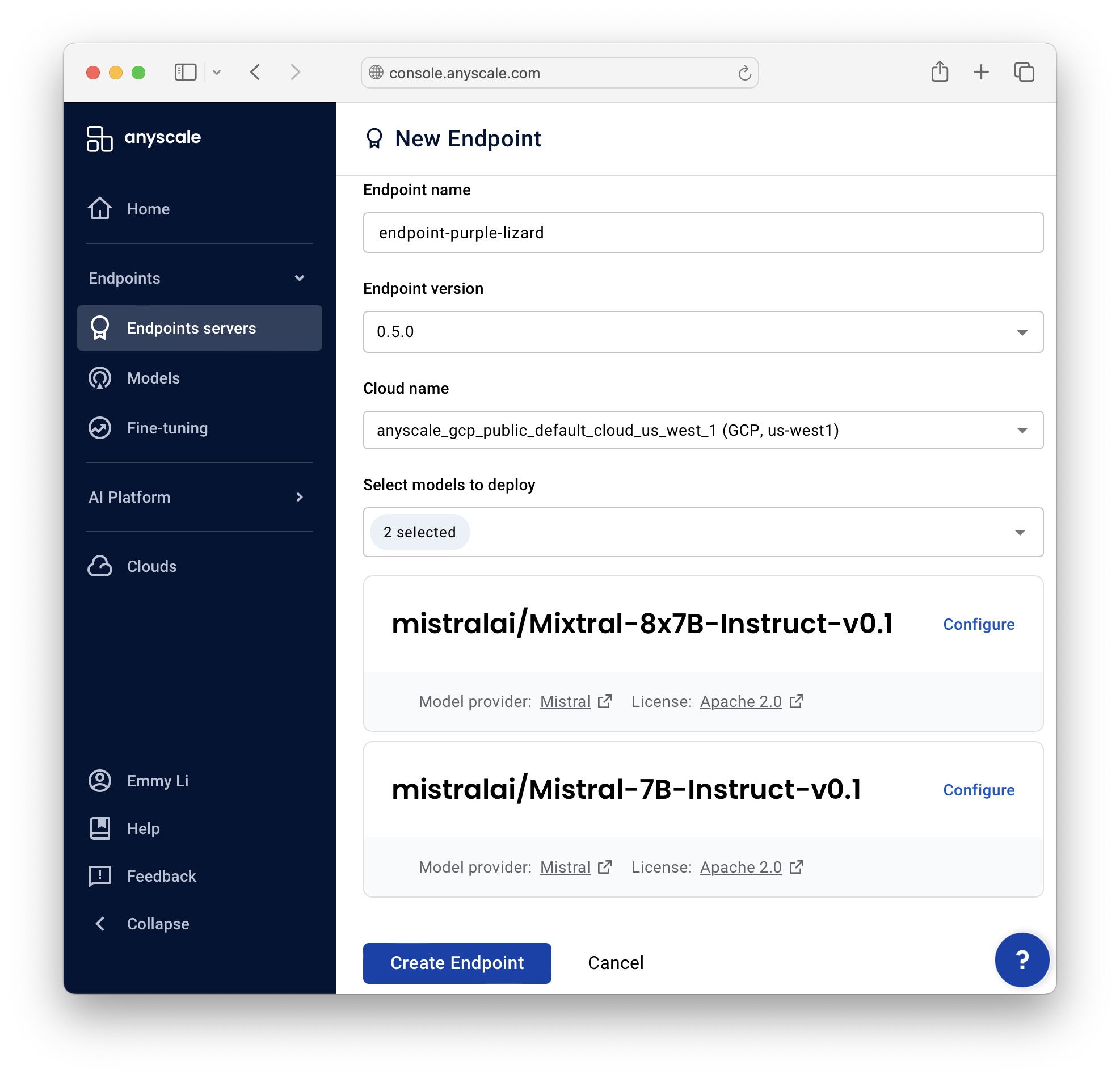

Follow the basic steps for creating an Anyscale Private Endpoint, and choose any of the following supported function calling models:

- mistralai/Mistral-7B-Instruct-v0.1
- mistralai/Mixtral-8x7B-Instruct-v0.1

Then, before deploying, select Configure and then Advanced configuration to edit the configuration YAML file to include two parameters:

- Set "enable_json_logit_processors": true under engine_kwargs.
- Set "standalone_function_calling_model": true in the top-level configuration.

See this template for a valid example.
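As a quick sanity check before deploying, the two settings can be verified once the YAML has been loaded into a dictionary. The following is only a sketch under stated assumptions: `check_function_calling_config` and `find_key` are hypothetical helpers, and the `engine_config` nesting in the example is invented for illustration. Because the exact location of `engine_kwargs` depends on the template, the check searches for that key at any depth instead of assuming a path.

```python
from typing import Any, Iterator, List


def find_key(node: Any, key: str) -> Iterator[Any]:
    """Yield every value stored under `key` anywhere in nested dicts and lists."""
    if isinstance(node, dict):
        for name, value in node.items():
            if name == key:
                yield value
            yield from find_key(value, key)
    elif isinstance(node, list):
        for item in node:
            yield from find_key(item, key)


def check_function_calling_config(config: dict) -> List[str]:
    """Return a list of problems; an empty list means both settings were found."""
    problems = []
    # This flag must sit at the top level of the configuration.
    if config.get("standalone_function_calling_model") is not True:
        problems.append("standalone_function_calling_model must be true at the top level")
    # This flag must sit inside an engine_kwargs mapping, wherever that is nested.
    engine_kwargs = [v for v in find_key(config, "engine_kwargs") if isinstance(v, dict)]
    if not any(v.get("enable_json_logit_processors") is True for v in engine_kwargs):
        problems.append("enable_json_logit_processors must be true under engine_kwargs")
    return problems


# Hypothetical, heavily trimmed configuration; the real template has more fields
# and may nest engine_kwargs differently.
example = {
    "standalone_function_calling_model": True,
    "engine_config": {"engine_kwargs": {"enable_json_logit_processors": True}},
}
print(check_function_calling_config(example))
```

An empty list means both parameters are set; each string in a non-empty list names a setting that still needs attention.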

Step 2: Deploy the function calling model

Select Create Endpoint to start the service. Review the status of resources, autoscaling events, and metrics in the Monitor tab.

Step 3: Query the endpoint

Querying the function calling model involves defining functions, their parameters, and decision logic for tool usage. The example below demonstrates building an LLM application for accessing current weather data.

Initialize the client

Import the OpenAI library and set up the client with your Anyscale Private Endpoint base URL and API key.

from openai import OpenAI

client = OpenAI(
    base_url="ANYSCALE_API_BASE",
    api_key="ANYSCALE_API_KEY",
)

See the model serving "Get started" page for instructions on how to set your Anyscale API base URL and key as environment variables.
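If the base URL and key are stored as environment variables, the client arguments can be read from them rather than hard-coded. This is a minimal sketch: the variable names `ANYSCALE_API_BASE` and `ANYSCALE_API_KEY` are assumed from the placeholders in the snippet above, and `load_endpoint_settings` is a hypothetical helper, not part of the `openai` package.

```python
import os
from typing import Dict, Mapping, Optional


def load_endpoint_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Read the endpoint base URL and API key, failing early if either is missing."""
    env = os.environ if env is None else env
    names = ("ANYSCALE_API_BASE", "ANYSCALE_API_KEY")  # assumed variable names
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {"base_url": env["ANYSCALE_API_BASE"], "api_key": env["ANYSCALE_API_KEY"]}


# Usage (requires the openai package):
#   from openai import OpenAI
#   client = OpenAI(**load_endpoint_settings())
```

Failing early with a clear message tends to be easier to debug than an authentication error returned by the first request.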

Define messages and tools

Messages simulate a conversation, and tools represent the callable functions.

messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What's the weather like in San Francisco?"}
]

tools = [
    {
        "type": "function",
        "function": {
            "name": "get_current_weather",
            "description": "Get the current weather in a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g. San Francisco, CA",
                    },
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["location"],
            },
        },
    }
]
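Because the model fills in arguments from this schema, it can help to check what it returns before calling a real service. The sketch below is a deliberately small check of required keys, unknown keys, and enum values; it is not full JSON Schema validation, and `validate_arguments` is a hypothetical helper introduced for illustration.

```python
from typing import Any, Dict, List


def validate_arguments(tool: Dict[str, Any], arguments: Dict[str, Any]) -> List[str]:
    """Compare model-produced arguments with a tool definition and list any mismatches."""
    schema = tool["function"]["parameters"]
    properties = schema.get("properties", {})
    errors = []
    # Every required parameter must be present.
    for name in schema.get("required", []):
        if name not in arguments:
            errors.append(f"missing required argument: {name}")
    # Every supplied argument must be declared and respect its enum, if any.
    for name, value in arguments.items():
        if name not in properties:
            errors.append(f"unexpected argument: {name}")
        elif "enum" in properties[name] and value not in properties[name]["enum"]:
            errors.append(f"invalid value for {name}: {value!r}")
    return errors


# Same tool definition as in the example above, repeated so the sketch is self-contained.
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": "Get the current weather in a given location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["location"],
        },
    },
}

print(validate_arguments(weather_tool, {"location": "San Francisco, CA", "unit": "celsius"}))
print(validate_arguments(weather_tool, {"format": "celsius"}))
```

The second call shows the kind of mismatch a model can produce, such as an argument name that the schema does not declare.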

Send the query

Make a request to the model and specify the tool choice. Setting tool_choice to auto lets the LLM decide whether to call a tool and which one to call.

response = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.1",
    messages=messages,
    tools=tools,
    tool_choice="auto",
)

print(response.choices[0].message)

To force a specific tool, set tool_choice to {"type": "function", "function": {"name": "get_current_weather"}}. To opt out of tool use, set tool_choice to none.
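The three tool_choice forms can be produced by one small function so that calling code does not repeat the dictionary literal. This is only an illustrative sketch; `make_tool_choice` is a hypothetical helper, and the values it returns are the ones described in this section.

```python
from typing import Any, Dict, Optional, Union


def make_tool_choice(name: Optional[str] = None, allow_tools: bool = True) -> Union[str, Dict[str, Any]]:
    """Build a tool_choice value: "none", "auto", or a forced function call."""
    if not allow_tools:
        return "none"  # the model must answer without calling a tool
    if name is None:
        return "auto"  # the model decides whether to call a tool
    return {"type": "function", "function": {"name": name}}  # force this tool


print(make_tool_choice())
print(make_tool_choice("get_current_weather"))
print(make_tool_choice(allow_tools=False))
```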

Understanding the output

The output format includes the tool call information, such as the tool ID, type, function name, and arguments. This is an example output when the LLM uses a tool:

{
    "role": "assistant",
    "content": null,
    "tool_calls": [
        {
            "id": "call_...",
            "type": "function",
            "function": {
                "name": "get_current_weather",
                "arguments": '{\n "location": "San Fransisco, USA",\n "format": "celsius"\n}'
            }
        }
    ],
}

For calls without tool use, the output looks like this:

{
    "role": "assistant",
    "content": "I don't know how to do that. Can you help me?",
}

Since the output is non-deterministic with non-zero temperature values, results may vary.
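Since a reply may or may not contain a tool call, application code usually branches on the two shapes shown above. The following sketch assumes the message exposes `tool_calls`, `id`, `function.name`, and `function.arguments` as attributes, as in the client snippets in this guide; `extract_tool_call` is a hypothetical helper, and the `SimpleNamespace` objects and the `call_123` ID are stand-ins so the example runs without an endpoint.

```python
import json
from types import SimpleNamespace
from typing import Any, Dict, Optional, Tuple


def extract_tool_call(message: Any) -> Optional[Tuple[str, str, Dict[str, Any]]]:
    """Return (tool_call_id, function_name, arguments), or None for a plain text reply."""
    tool_calls = getattr(message, "tool_calls", None)
    if not tool_calls:
        return None
    call = tool_calls[0]  # only the first call is read in this sketch
    try:
        arguments = json.loads(call.function.arguments)  # arguments arrive as a JSON string
    except json.JSONDecodeError as exc:
        raise ValueError(f"tool arguments are not valid JSON: {exc}") from exc
    return call.id, call.function.name, arguments


# Stand-in messages that mimic the two output shapes.
tool_message = SimpleNamespace(
    content=None,
    tool_calls=[
        SimpleNamespace(
            id="call_123",  # hypothetical ID
            type="function",
            function=SimpleNamespace(
                name="get_current_weather",
                arguments='{"location": "San Francisco, CA"}',
            ),
        )
    ],
)
plain_message = SimpleNamespace(content="I don't know how to do that.", tool_calls=None)

print(extract_tool_call(tool_message))
print(extract_tool_call(plain_message))
```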

Integrating tool responses

You can feed the tool's response back into the LLM for subsequent queries. This process allows the model to generate contextually relevant replies based on the tool's output.

import json

# Extract the assistant message and its tool call
assistant_message = response.choices[0].message
tool_response = assistant_message.tool_calls[0]
arguments = json.loads(tool_response.function.arguments)
tool_id = tool_response.id
tool_name = tool_response.function.name

# Simulate a tool response (e.g., fetching weather data)
# In a real scenario, replace this with a call to your weather service.
content = json.dumps({"temperature": 20, "unit": "celsius"})

# Append the assistant's tool call to the conversation
messages.append({
    "role": "assistant",
    "content": assistant_message.content,
    "tool_calls": [{
        "id": tool_id,
        "type": "function",
        "function": {"name": tool_name, "arguments": tool_response.function.arguments},
    }],
})

# Append the simulated tool response to the conversation
messages.append({
    "role": "tool", "content": content, "tool_call_id": tool_id, "name": tool_name
})

# Query the LLM with the updated conversation, using the same deployed model
response = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.1",
    messages=messages,
    tools=tools,
    tool_choice="auto",
)

print(response.choices[0].message)

The LLM should generate a context-aware response based on the tool's data:

{
    "role": "assistant",
    "content": "It's 20 degrees celsius in San Francisco.",
}
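In an application, the simulated response is typically replaced by a lookup from the tool name to a Python function. The sketch below shows one possible shape for that step. It rests on assumptions: `get_current_weather` here is a hypothetical stub that returns fixed data, and `TOOL_REGISTRY` and `build_tool_message` are illustrative names. The returned dictionary uses the same keys as the tool message in the example above.

```python
import json
from typing import Any, Callable, Dict


def get_current_weather(location: str, unit: str = "celsius") -> Dict[str, Any]:
    """Hypothetical stub; a real version would call a weather service."""
    return {"location": location, "temperature": 20, "unit": unit}


# Map the tool names declared in `tools` to the functions that implement them.
TOOL_REGISTRY: Dict[str, Callable[..., Dict[str, Any]]] = {
    "get_current_weather": get_current_weather,
}


def build_tool_message(tool_id: str, tool_name: str, arguments: Dict[str, Any]) -> Dict[str, str]:
    """Run the named tool and wrap its result as a "tool" role message."""
    if tool_name not in TOOL_REGISTRY:
        result: Dict[str, Any] = {"error": f"unknown tool: {tool_name}"}
    else:
        try:
            result = TOOL_REGISTRY[tool_name](**arguments)
        except TypeError as exc:
            # Raised when the model supplies a wrong or missing argument name.
            result = {"error": str(exc)}
    return {
        "role": "tool",
        "content": json.dumps(result),
        "tool_call_id": tool_id,
        "name": tool_name,
    }


print(build_tool_message("call_123", "get_current_weather", {"location": "San Francisco, CA"}))
```

Returning errors as tool content, rather than raising, is one design option: it gives the model a chance to explain the failure or ask for corrected input in its next reply.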

Customize and extend this example

This section provides notes to help you customize and extend the function calling capabilities of Anyscale Private Endpoints.

- Single function calls: These function calling model endpoints support single function calls only. They don't support parallel or nested function calls. Consider this limitation when designing complex or multi-step external interactions.

- Sampling parameters: The top_p and top_k sampling parameters are incompatible with function calling because of the desire for deterministic outputs in function calling scenarios, as opposed to probabilistic approaches typical in other LLM usages.

- Repeated tool call troubleshooting: The LLM may sometimes repeat the tool call or incorrectly cite the tool_id. To prevent this, either set tool_choice to none or refine the prompt to provide clearer instructions to the model.

- Finish reasons: The default finish reason applies unless the LLM uses a tool, in which case it switches to tool_calls.
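Two of the notes above lend themselves to small guards in application code. The sketch below drops top_p and top_k whenever tools are attached to a request, and checks the finish reason to detect tool use. `build_request_kwargs` and `used_tool` are hypothetical helpers; the first only builds a dictionary, and how that dictionary is passed to the client is outside the scope of this sketch.

```python
from typing import Any, Dict, List, Optional

# Sampling parameters that the notes above describe as incompatible with function calling.
INCOMPATIBLE_WITH_TOOLS = ("top_p", "top_k")


def build_request_kwargs(
    model: str,
    messages: List[Dict[str, Any]],
    tools: Optional[List[Dict[str, Any]]] = None,
    **sampling: Any,
) -> Dict[str, Any]:
    """Assemble request arguments, removing top_p and top_k when tools are supplied."""
    kwargs: Dict[str, Any] = {"model": model, "messages": messages}
    if tools:
        kwargs["tools"] = tools
        kwargs["tool_choice"] = "auto"
        sampling = {k: v for k, v in sampling.items() if k not in INCOMPATIBLE_WITH_TOOLS}
    kwargs.update(sampling)
    return kwargs


def used_tool(finish_reason: str) -> bool:
    """Return True when the finish reason indicates that the model called a tool."""
    return finish_reason == "tool_calls"


request = build_request_kwargs(
    "mistralai/Mistral-7B-Instruct-v0.1",
    [{"role": "user", "content": "What's the weather like in San Francisco?"}],
    tools=[{"type": "function", "function": {"name": "get_current_weather"}}],
    temperature=0.0,
    top_p=0.9,
)
print(sorted(request))
print(used_tool("tool_calls"))
```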