Get started with Anyscale

This page provides an overview of getting started on Anyscale.

1. Join Anyscale

- Contact your organization's admin and request an invitation.

- Use the email invitation link to register as a user in the organization.

If you are the first Anyscale user on your team and don't have an Anyscale organization, see Get started for admins to create and set up your Anyscale organization.

2. Try it out

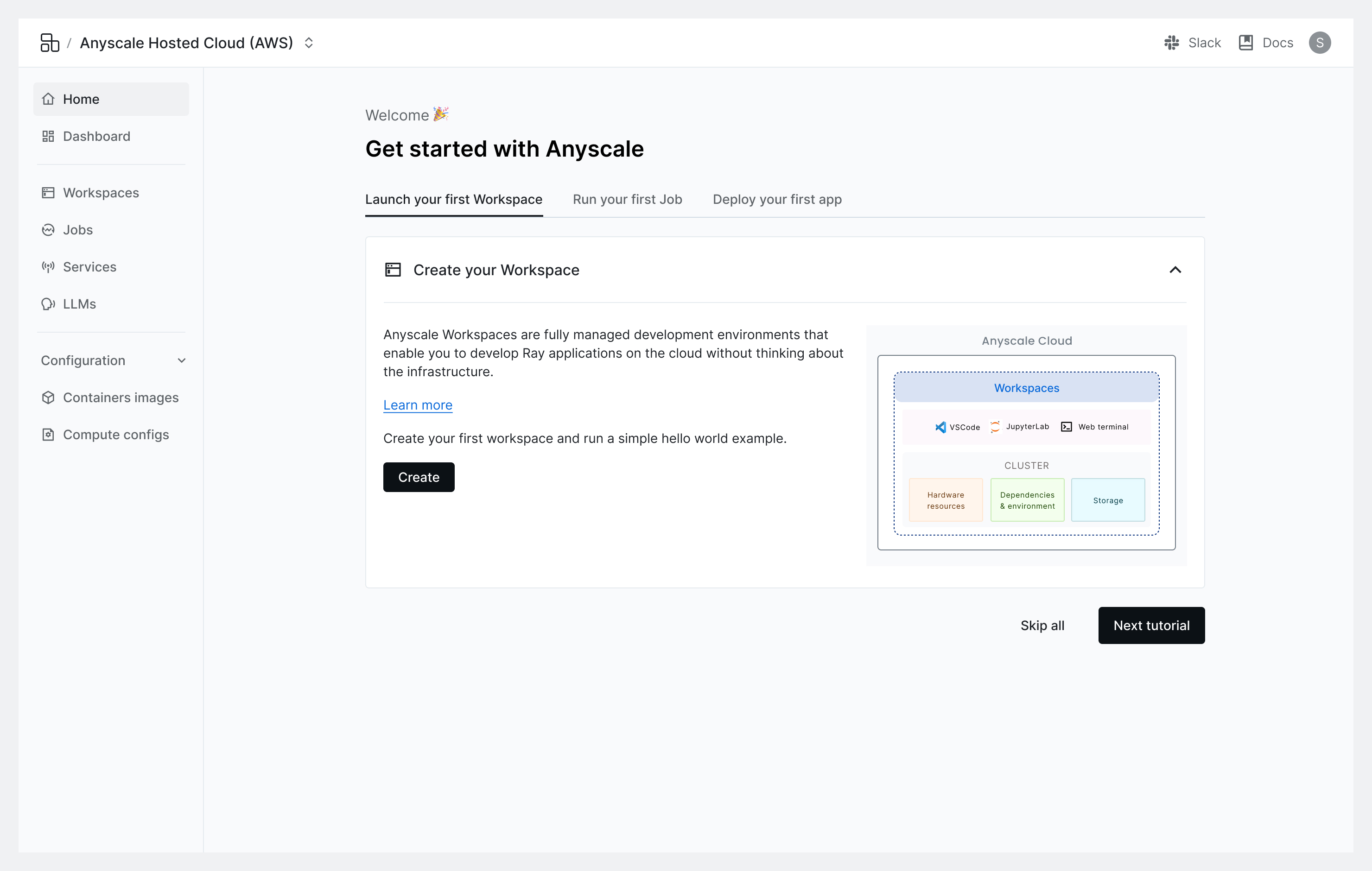

Once you've joined an organization and logged in, follow the quickstart tutorial in the console to learn about the platform.

3. Set up your environment

To interact with Anyscale programmatically or from your terminal, install the Anyscale CLI. This step is optional if you only plan to use the Anyscale console.

Step 1: Install the Anyscale CLI and Python client package

pip install -U anyscale

Step 2: Authenticate the CLI

To access Anyscale's services, you need an API key that verifies your identity. Running the following command fetches the token and writes it to the local credential file ~/.anyscale/credentials.json:

anyscale login

You can also manually generate your API key through the console and set it in the ANYSCALE_CLI_TOKEN environment variable. See Manage API keys.
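The two authentication routes leave different traces on your machine: `anyscale login` writes the token to ~/.anyscale/credentials.json, while a key generated in the console is read from the ANYSCALE_CLI_TOKEN environment variable. The following POSIX shell sketch reports which of the two is present. The function name `check_anyscale_credentials` is hypothetical and not part of the Anyscale CLI. It only checks for presence, does not validate the token, and assumes nothing about which source the CLI prefers when both exist.

```shell
# Hypothetical helper (not part of the Anyscale CLI): report which of the two
# documented credential sources is configured. It only checks for presence;
# it does not validate the token or decide which source the CLI uses.
check_anyscale_credentials() {
  # Source 1: API key exported in the ANYSCALE_CLI_TOKEN environment variable
  if [ -n "${ANYSCALE_CLI_TOKEN:-}" ]; then
    echo "ANYSCALE_CLI_TOKEN: set"
  else
    echo "ANYSCALE_CLI_TOKEN: not set"
  fi

  # Source 2: credential file written by `anyscale login`
  if [ -f "${HOME}/.anyscale/credentials.json" ]; then
    echo "credentials file: present"
  else
    echo "credentials file: missing"
  fi
}
```

For example, after running `export ANYSCALE_CLI_TOKEN=<your-api-key>` with a key from the console, `check_anyscale_credentials` prints `ANYSCALE_CLI_TOKEN: set` as its first line.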