Global Resource Min/Max Compute Configs User Guide

The Autoscaler is a component that adjusts the number of running nodes based on real-time workload and resource utilization, keeping the cluster both performant and cost-effective. By specifying the min/max workers for each node type, you let the Autoscaler scale up and down dynamically as the workload changes.

Global resource min/max adds flexibility by letting you specify a global min and/or max on a custom resource, counted across all node types that provide that resource. This general capability enables a number of use cases, for example:

- [Example #1] You have access to two GPU node types, T4 and A10G, and prefer T4 whenever it is available. When T4 is not available, you are fine with using A10G instead.

- [Example #2] You have access to two GPU node types, 4 x T4 and 1 x T4. Your workload requires at least 10 and at most 20 GPUs in total, and the mix of the two node types doesn't matter.

How to use

This feature is available in Ray 2.7+ and in nightly images built after Aug 22, 2023.

Example #1 - Via the Console

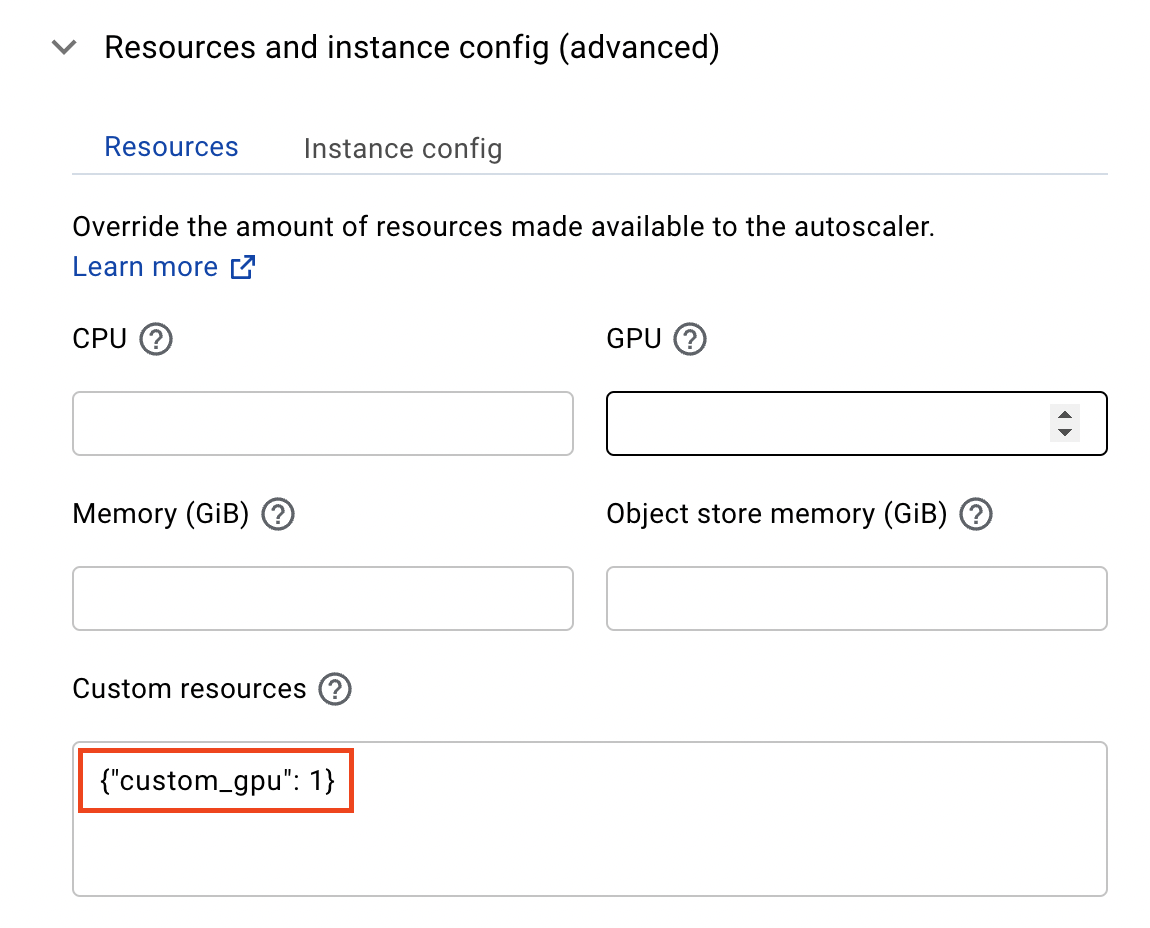

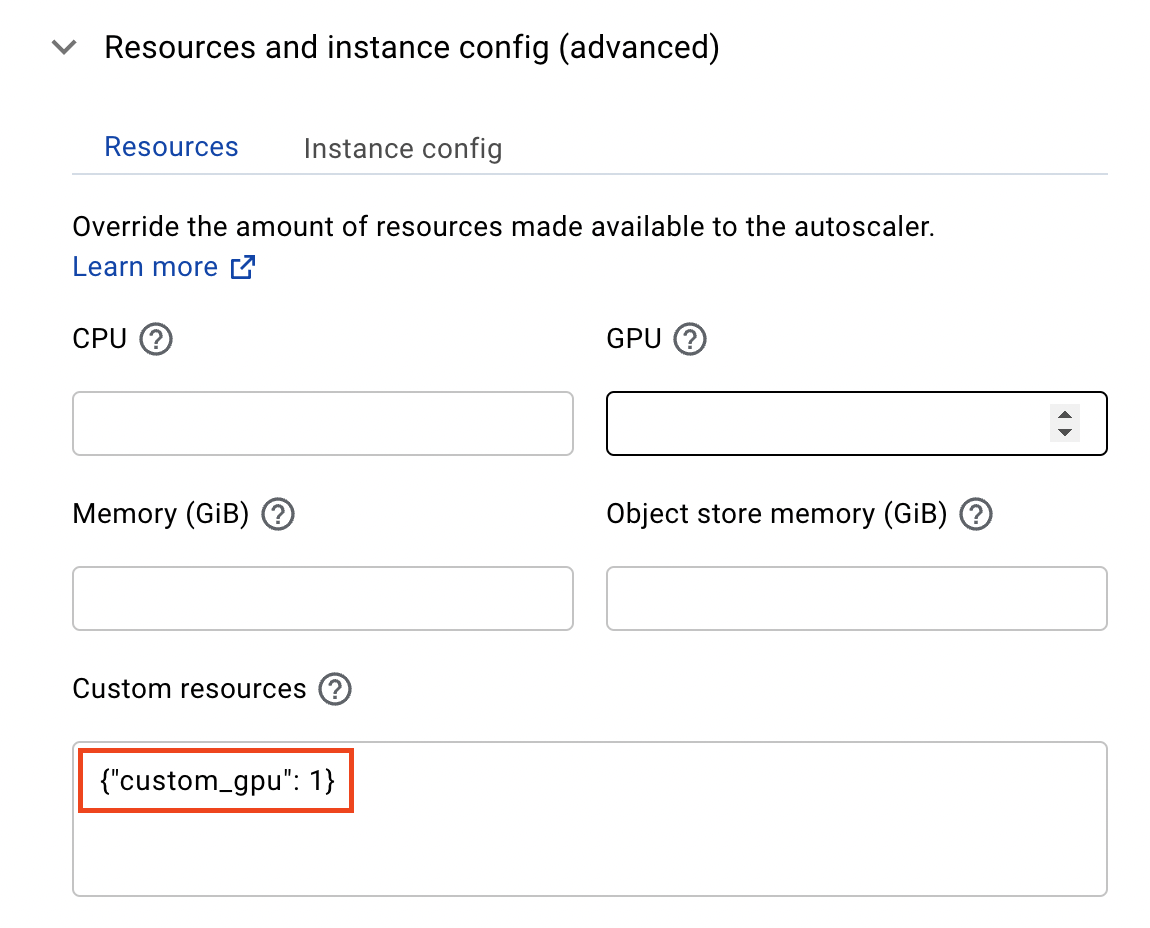

- Create two worker node types, T4 and A10G. Set the custom resource of each to:

    {"custom_gpu": 1}

- In the cluster-wide Advanced Configurations input box, specify the following JSON for your cloud provider.

AWS (EC2):

    {
      "TagSpecifications": [
        {
          "Tags": [
            {
              "Key": "as-feature-cross-group-min-count-custom_gpu",
              "Value": "1"
            }
          ],
          "ResourceType": "instance"
        }
      ]
    }

GCP (GCE):

    {
      "instance_properties": {
        "labels": {
          "as-feature-cross-group-min-count-custom_gpu": "1"
        }
      }
    }

- When the cluster is launched, the Autoscaler ensures that a min of `custom_gpu = 1` is satisfied by attempting to launch the node groups that specify `custom_gpu`. In this case, either a T4 or an A10G node will be launched.
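If you manage several clusters, you may prefer to generate this advanced configuration rather than type it by hand. The sketch below is a hypothetical helper, not part of any Anyscale SDK. It assumes only the tag-key pattern shown above (`as-feature-cross-group-min-count-<resource>` and `as-feature-cross-group-max-count-<resource>`) and produces the AWS `TagSpecifications` and GCP `labels` shapes used in the console examples, with values as strings.

```python
import json

# Hypothetical helper; the key prefix is taken from the tags shown in this guide.
TAG_PREFIX = "as-feature-cross-group"


def cross_group_tags(resource, min_count=None, max_count=None):
    """Return {tag_key: value} for a global min and/or max on a custom resource."""
    tags = {}
    if min_count is not None:
        tags[f"{TAG_PREFIX}-min-count-{resource}"] = str(min_count)
    if max_count is not None:
        tags[f"{TAG_PREFIX}-max-count-{resource}"] = str(max_count)
    return tags


def aws_advanced_config(tags):
    """Wrap the tags in the EC2 TagSpecifications shape used in the AWS example."""
    return {
        "TagSpecifications": [
            {
                "Tags": [{"Key": k, "Value": v} for k, v in tags.items()],
                "ResourceType": "instance",
            }
        ]
    }


def gcp_advanced_config(tags):
    """Wrap the tags as instance labels, matching the GCP example."""
    return {"instance_properties": {"labels": dict(tags)}}


if __name__ == "__main__":
    # Example #1: a global min of 1 custom_gpu.
    tags = cross_group_tags("custom_gpu", min_count=1)
    print(json.dumps(aws_advanced_config(tags), indent=2))
    print(json.dumps(gcp_advanced_config(tags), indent=2))
```

Passing both `min_count=10` and `max_count=20` yields the two tags used in Example #2.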

Example #1 - Via SDK

compute_config.yaml

    cloud: anyscale_v2_default_cloud_vpn_us_east_2  # You may specify `cloud_id` instead
    allowed_azs:
      - us-east-2a
      - us-east-2b
      - us-east-2c
    head_node_type:
      name: head_node_type
      instance_type: m5.2xlarge
    worker_node_types:
      - name: gpu_worker_1
        instance_type: g4dn.8xlarge
        resources:
          custom_resources:
            custom_gpu: 1
        min_workers: 0
        max_workers: 10
        use_spot: true
      - name: gpu_worker_2
        instance_type: g5.8xlarge
        resources:
          custom_resources:
            custom_gpu: 1
        min_workers: 0
        max_workers: 10
    # Cluster-wide AWS configuration; tag values are quoted strings, as in the console JSON
    aws:
      TagSpecifications:
        - ResourceType: instance
          Tags:
            - Key: as-feature-cross-group-min-count-custom_gpu
              Value: "1"

Example #1 - Python SDK Code

    import yaml

    from anyscale import AnyscaleSDK
    from anyscale.sdk.anyscale_client.models import CreateClusterCompute

    sdk = AnyscaleSDK()

    with open("compute_config.yaml") as f:
        compute_configs = yaml.safe_load(f)

    # If your config file contains `cloud`, use this to get the `cloud_id`
    if "cloud" in compute_configs:
        compute_configs["cloud_id"] = sdk.search_clouds(
            {"name": {"equals": compute_configs["cloud"]}}
        ).results[0].id
        del compute_configs["cloud"]

    config = sdk.create_cluster_compute(
        CreateClusterCompute(
            name="my-cluster-compute",
            config=compute_configs,
        )
    )
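Before calling `create_cluster_compute`, you may want to sanity-check `compute_configs`. The sketch below is a hypothetical pre-flight check, not part of the Anyscale SDK. It assumes the dict shape of the YAML config above (global tags under the top-level `aws` key, per-group amounts under `resources.custom_resources`) and only inspects the AWS tags, not GCP labels. It flags tags that name a custom resource no worker node type declares, a min greater than the max, and a min larger than what all groups could provide at their `max_workers`.

```python
# Hypothetical pre-flight check, not part of the Anyscale SDK.
TAG_PREFIX = "as-feature-cross-group-"


def check_cross_group_tags(config):
    """Return a list of problems with the global min/max tags in a compute config dict."""
    # Upper bound on each custom resource: per-node amount x max_workers, summed over groups.
    capacity = {}
    for group in config.get("worker_node_types", []):
        custom = (group.get("resources") or {}).get("custom_resources") or {}
        for name, per_node in custom.items():
            capacity[name] = capacity.get(name, 0) + per_node * group.get("max_workers", 0)

    # Parse keys of the form "<prefix>min-count-<resource>" or "<prefix>max-count-<resource>"
    # from the cluster-wide AWS TagSpecifications (assumed to sit under a top-level `aws`).
    limits = {}
    for spec in (config.get("aws") or {}).get("TagSpecifications", []):
        for tag in spec.get("Tags", []):
            key = tag["Key"]
            if key.startswith(TAG_PREFIX):
                kind, _, resource = key[len(TAG_PREFIX):].partition("-count-")
                limits.setdefault(resource, {})[kind] = int(tag["Value"])

    problems = []
    for resource, bounds in limits.items():
        if resource not in capacity:
            problems.append(f"{resource}: no worker node type declares this custom resource")
            continue
        low, high = bounds.get("min"), bounds.get("max")
        if low is not None and high is not None and low > high:
            problems.append(f"{resource}: min {low} is greater than max {high}")
        if low is not None and low > capacity[resource]:
            problems.append(f"{resource}: min {low} exceeds total capacity {capacity[resource]}")
    return problems


# Usage with a dict shaped like the Example #1 config (cloud and head node omitted).
example = {
    "worker_node_types": [
        {"name": "gpu_worker_1", "resources": {"custom_resources": {"custom_gpu": 1}}, "max_workers": 10},
        {"name": "gpu_worker_2", "resources": {"custom_resources": {"custom_gpu": 1}}, "max_workers": 10},
    ],
    "aws": {"TagSpecifications": [{"ResourceType": "instance", "Tags": [
        {"Key": "as-feature-cross-group-min-count-custom_gpu", "Value": "1"},
    ]}]},
}
print(check_cross_group_tags(example))  # → []
```

`int(tag["Value"])` accepts both the quoted string `"1"` and a bare YAML integer, so the check works either way.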

Example #2 - Via the Console

- Create two worker node types, 4 x T4 and 1 x T4. Set their custom resources to `{"custom_gpu": 4}` and `{"custom_gpu": 1}`, respectively.

- In the cluster-wide Advanced Configurations input box, specify the following JSON for your cloud provider.

AWS (EC2):

    {
      "TagSpecifications": [
        {
          "Tags": [
            {
              "Key": "as-feature-cross-group-min-count-custom_gpu",
              "Value": "10"
            },
            {
              "Key": "as-feature-cross-group-max-count-custom_gpu",
              "Value": "20"
            }
          ],
          "ResourceType": "instance"
        }
      ]
    }

GCP (GCE):

    {
      "instance_properties": {
        "labels": {
          "as-feature-cross-group-min-count-custom_gpu": "10",
          "as-feature-cross-group-max-count-custom_gpu": "20"
        }
      }
    }

- When the cluster is launched, the Autoscaler ensures that a min count of 10 `custom_gpu` is satisfied by attempting to launch the node groups that specify `custom_gpu`. In this case, a combination of 4 x T4 and 1 x T4 nodes will be launched, providing at least 10 T4 GPUs.

- When the workload requires additional GPUs, the Autoscaler respects the max count of 20 `custom_gpu` as it adds worker nodes.
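To see what these global bounds allow, the sketch below enumerates node counts for the two groups in this example (4 and 1 `custom_gpu` per node, each group capped at `max_workers: 10` as in the SDK config that follows) and keeps the mixes whose total falls between 10 and 20. It only illustrates the arithmetic of the constraint; it is an assumption-free brute force, not a model of which mix the Autoscaler actually chooses.

```python
from itertools import product


def feasible_mixes(per_node, max_workers, global_min, global_max):
    """Yield (node_counts, total) pairs whose total resource lies in [global_min, global_max]."""
    # Every group may run anywhere from 0 to its max_workers nodes.
    ranges = [range(m + 1) for m in max_workers]
    for counts in product(*ranges):
        total = sum(c * r for c, r in zip(counts, per_node))
        if global_min <= total <= global_max:
            yield counts, total


# Example #2: 4 x T4 nodes (custom_gpu: 4) and 1 x T4 nodes (custom_gpu: 1).
mixes = list(feasible_mixes(per_node=[4, 1], max_workers=[10, 10], global_min=10, global_max=20))
print(len(mixes), "feasible mixes, e.g.", mixes[:3])
```

For instance, three 4 x T4 nodes alone (12 GPUs) or two 4 x T4 nodes plus two 1 x T4 nodes (10 GPUs) both fall inside the bounds, while six 4 x T4 nodes (24 GPUs) do not.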

Example #2 - Via SDK

compute_config.yaml

    cloud: anyscale_v2_default_cloud_vpn_us_east_2  # You may specify `cloud_id` instead
    allowed_azs:
      - us-east-2a
      - us-east-2b
      - us-east-2c
    head_node_type:
      name: head_node_type
      instance_type: m5.2xlarge
    worker_node_types:
      - name: gpu_worker_1
        instance_type: g4dn.12xlarge
        resources:
          custom_resources:
            custom_gpu: 4
        min_workers: 0
        max_workers: 10
        use_spot: true
      - name: gpu_worker_2
        instance_type: g4dn.4xlarge
        resources:
          custom_resources:
            custom_gpu: 1
        min_workers: 0
        max_workers: 10
    # Cluster-wide AWS configuration; tag values are quoted strings, as in the console JSON
    aws:
      TagSpecifications:
        - ResourceType: instance
          Tags:
            - Key: as-feature-cross-group-min-count-custom_gpu
              Value: "10"
            - Key: as-feature-cross-group-max-count-custom_gpu
              Value: "20"

Example #2 - Python SDK Code

    import yaml

    from anyscale import AnyscaleSDK
    from anyscale.sdk.anyscale_client.models import CreateClusterCompute

    sdk = AnyscaleSDK()

    with open("compute_config.yaml") as f:
        compute_configs = yaml.safe_load(f)

    # If your config file contains `cloud`, use this to get the `cloud_id`
    if "cloud" in compute_configs:
        compute_configs["cloud_id"] = sdk.search_clouds(
            {"name": {"equals": compute_configs["cloud"]}}
        ).results[0].id
        del compute_configs["cloud"]

    config = sdk.create_cluster_compute(
        CreateClusterCompute(
            name="my-cluster-compute",
            config=compute_configs,
        )
    )

FAQ

Question: There are both per-node-group min/max and global resource min/max. How are they prioritized?

- The per-node-group min has the highest priority and is always satisfied.

- The global resource min has lower priority than the per-node-group max and the global resource max.

- If any of the mins is not satisfied, the cluster is considered unhealthy.
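The priority rules above can be illustrated with a small model. The sketch below is a simplified, hypothetical model, not the Autoscaler's implementation: it starts each group at its per-node-group min (always honored), then adds nodes toward the global min only while neither the group's max nor the global max would be exceeded, and reports the cluster as unhealthy if the global min is still unmet. It fills groups in list order, which is an arbitrary choice made for illustration.

```python
from dataclasses import dataclass


@dataclass
class NodeGroup:
    name: str
    per_node: int  # custom resource units each node provides (hypothetical field)
    min_workers: int
    max_workers: int


def plan(groups, global_min, global_max):
    """Return (node counts per group, total resource, healthy flag) under the FAQ rules."""
    # 1. Per-node-group mins have the highest priority: applied unconditionally,
    #    even if that pushes the total above the global max.
    counts = {g.name: g.min_workers for g in groups}
    total = sum(g.per_node * g.min_workers for g in groups)
    # 2. Grow toward the global min, but never past a per-group max or the global max.
    for g in groups:
        while (total < global_min
               and counts[g.name] < g.max_workers
               and total + g.per_node <= global_max):
            counts[g.name] += 1
            total += g.per_node
    # 3. Per-group mins are always met by step 1, so only the global min can fail here.
    healthy = total >= global_min
    return counts, total, healthy


groups = [NodeGroup("4xT4", 4, 0, 10), NodeGroup("1xT4", 1, 0, 10)]
print(plan(groups, global_min=10, global_max=20))
```

Lowering both `max_workers` values (for example to 1 and 2) leaves the global min of 10 unreachable, so the model reports the cluster as unhealthy, matching the last FAQ rule.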