Compute configurations

A compute configuration defines the virtual hardware used by a cluster. These resources make up the shape of the cluster and determine how it runs applications and workloads in a distributed computing environment. If you are familiar with a Ray cluster.yaml, an Anyscale compute config is a superset of that configuration. A compute config allows you to control the following:

- Instance types: Virtual machine instance types for head and worker nodes.

- Network settings: Network interfaces, including subnet settings, security groups, regions, and private/public IP options.

- Storage options: Node-attached storage, including disk types and sizes.

- Scaling parameters: Minimum and maximum worker node counts to determine scaling behavior based on workload.

- Spot instance configuration: Spot instances for cost savings, and related enhancements (for example, fallback to on-demand instances when spot instances are not available).

- Cloud-specific advanced configurations: Provider-specific configurations, such as advanced networking and service integrations for clouds like AWS or GCP.

- Auto-select worker nodes: A beta feature that lets you define the accelerator type in the Ray application. The Anyscale autoscaler then selects suitable instances based on availability.

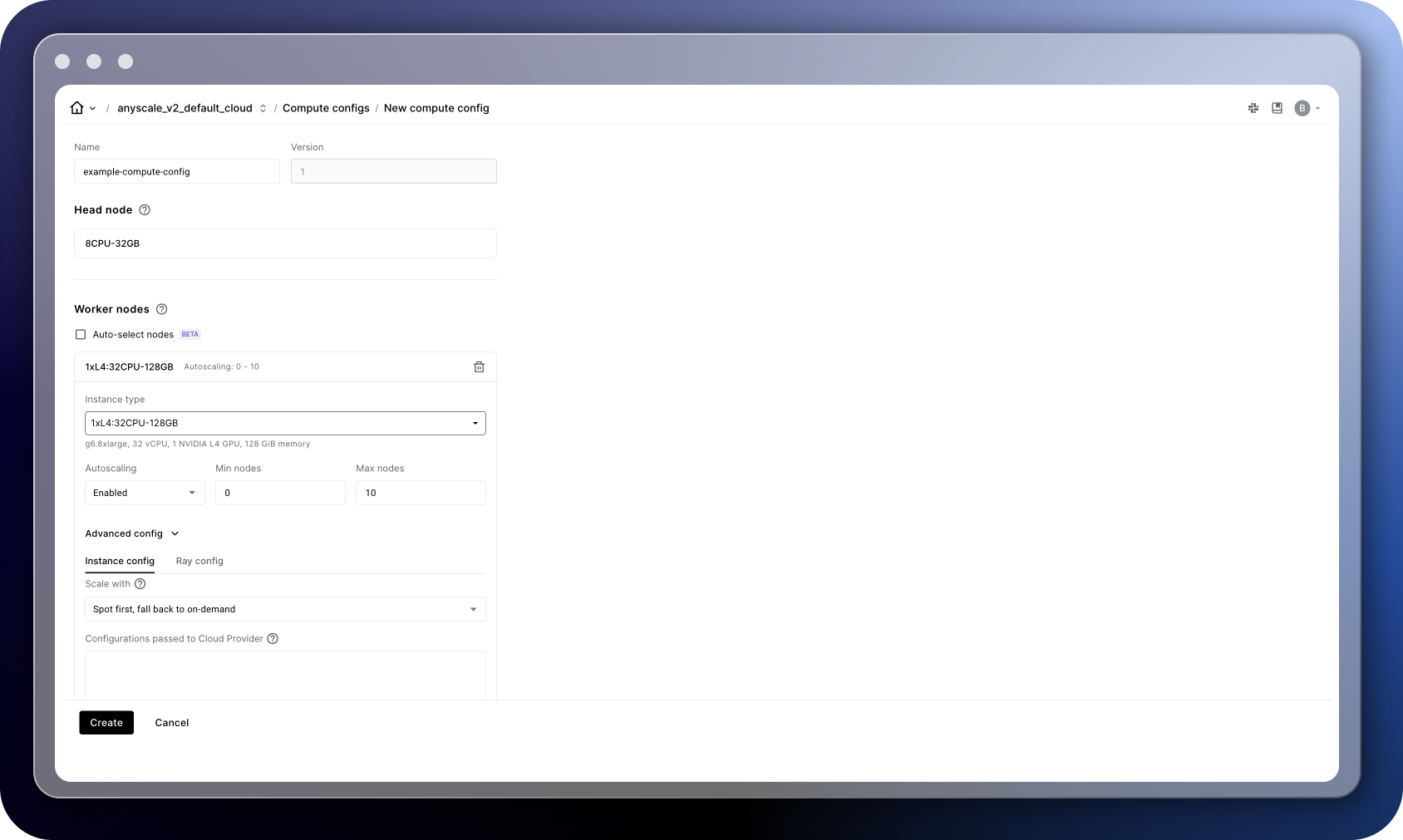

Create a compute config

You can create a compute config in one of three ways: the Web UI, the CLI, or the Python SDK. The CLI requires a YAML file, which lets you keep compute configurations under version control.

Versions

Anyscale supports versioning of compute configs, so you can update the compute resource requirements for a workload over time.

Supported instance types

Anyscale supports a wide variety of machine instance types. For a complete overview, see Instance types.

Cloud-specific configurations

Cloud-specific configurations are only available with customer-hosted Anyscale Clouds.

Managing capacity reservations

Securing certain instance types from cloud providers can be challenging due to high demand or limited availability. With Anyscale, you can use your cloud provider's capacity reservations to ensure that the required node types are available for your workloads. To configure capacity reservations for a specific worker node type, modify the advanced configuration through the Web UI or by editing the compute config YAML file.

- AWS (EC2)

- GCP (GCE)

On AWS, to add a reservation with the Web UI, navigate to a worker node and expand the Advanced config section. Under the Instance config tab, enter the following JSON, substituting your specific reservation ID:

    {
      "CapacityReservationSpecification": {
        "CapacityReservationTarget": {
          "CapacityReservationId": "RESERVATION_ID"
        }
      }
    }

Sample YAML file that you can use with the Anyscale CLI/SDK:

    cloud: CLOUD_NAME
    head_node:
      instance_type: INSTANCE_TYPE_HEAD
    worker_nodes:
      - instance_type: INSTANCE_TYPE_ACCELERATED
        min_nodes: MIN_NODES
        max_nodes: MAX_NODES
        advanced_instance_config:
          CapacityReservationSpecification:
            CapacityReservationTarget:
              CapacityReservationId: RESERVATION_ID

For additional details on utilizing capacity reservations on AWS, see the AWS Documentation.
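The AWS reservation fragment above can also be generated programmatically, which helps when the reservation ID comes from a script or environment variable. The following is a minimal sketch using only the Python standard library; the helper name `aws_reservation_config` and the ID `cr-EXAMPLE` are hypothetical and not part of the Anyscale SDK.

```python
import json


def aws_reservation_config(reservation_id: str) -> dict:
    """Build the advanced instance config that targets one EC2 capacity reservation.

    Hypothetical helper: it only mirrors the JSON structure shown above.
    """
    if not reservation_id:
        raise ValueError("reservation_id must be a non-empty string")
    return {
        "CapacityReservationSpecification": {
            "CapacityReservationTarget": {
                "CapacityReservationId": reservation_id,
            }
        }
    }


# "cr-EXAMPLE" is a placeholder, not a real reservation ID.
print(json.dumps(aws_reservation_config("cr-EXAMPLE"), indent=2))
```

The printed JSON can be pasted into the Instance config tab, or the dictionary can be placed under `advanced_instance_config` for a worker node.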

On GCP, to add a reservation with the Web UI, navigate to a worker node and expand the Advanced config section. Under the Instance config tab, enter the following JSON, substituting your specific reservation name:

    {
      "instanceProperties": {
        "reservationAffinity": {
          "consumeReservationType": "SPECIFIC_RESERVATION",
          "key": "compute.googleapis.com/reservation-name",
          "values": ["RESERVATION_NAME"]
        }
      }
    }

Sample YAML file that you can use with the Anyscale CLI/SDK:

    cloud: CLOUD_NAME
    head_node:
      instance_type: INSTANCE_TYPE_HEAD
    worker_nodes:
      - instance_type: INSTANCE_TYPE_ACCELERATED
        min_nodes: MIN_NODES
        max_nodes: MAX_NODES
        advanced_instance_config:
          instanceProperties:
            reservationAffinity:
              consumeReservationType: SPECIFIC_RESERVATION
              key: compute.googleapis.com/reservation-name
              values: [RESERVATION_NAME]

For additional details on consuming reservations in Google Cloud, see the Google Cloud documentation.

Changing the default disk size

The default disk size for all nodes in an Anyscale cluster is 150 GB. You can change the default disk size for the entire cluster or an individual worker node type.

- AWS (EC2)

- GCP (GCE)

On AWS, to modify the default disk size from the Web UI, use the Advanced configuration section for the worker node or the Advanced settings section for the entire cluster. This example increases the disk size to 500 GB.

    {
      "BlockDeviceMappings": [
        {
          "Ebs": {
            "VolumeSize": 500,
            "VolumeType": "gp3",
            "DeleteOnTermination": true
          },
          "DeviceName": "/dev/sda1"
        }
      ]
    }

Sample YAML file that you can use with the Anyscale CLI/SDK. This sample modifies the disk for all nodes in the Anyscale cluster.

    cloud: CLOUD_NAME
    head_node:
      instance_type: INSTANCE_TYPE_HEAD
    worker_nodes:
      - instance_type: INSTANCE_TYPE_ACCELERATED
        min_nodes: MIN_NODES
        max_nodes: MAX_NODES
    advanced_instance_config:
      BlockDeviceMappings:
        - Ebs:
            VolumeSize: 500
            VolumeType: gp3
            DeleteOnTermination: true
          DeviceName: "/dev/sda1"
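If you maintain several compute configs that differ only in disk size, it can help to derive them from one base fragment. The sketch below, which assumes the AWS `BlockDeviceMappings` structure shown above, returns a copy of a config with the EBS volume size changed. The helper name `with_ebs_volume_size` is hypothetical and not part of any Anyscale API.

```python
import copy

# Base fragment copied from the AWS example above.
BASE_CONFIG = {
    "BlockDeviceMappings": [
        {
            "Ebs": {
                "VolumeSize": 500,
                "VolumeType": "gp3",
                "DeleteOnTermination": True,
            },
            "DeviceName": "/dev/sda1",
        }
    ]
}


def with_ebs_volume_size(advanced_config: dict, size_gb: int) -> dict:
    """Return a deep copy of the config with every EBS volume set to size_gb."""
    if not isinstance(size_gb, int) or size_gb <= 0:
        raise ValueError("size_gb must be a positive integer")
    updated = copy.deepcopy(advanced_config)  # leave the caller's config untouched
    for mapping in updated.get("BlockDeviceMappings", []):
        mapping.setdefault("Ebs", {})["VolumeSize"] = size_gb
    return updated


larger = with_ebs_volume_size(BASE_CONFIG, 1000)
print(larger["BlockDeviceMappings"][0]["Ebs"]["VolumeSize"])
```

Working on a deep copy keeps the base fragment reusable for other node types.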

On GCP, to modify the default disk size from the Web UI, use the Advanced configuration section for the worker node or the Advanced settings section for the entire cluster. This example increases the disk size to 500 GB.

    {
      "instance_properties": {
        "disks": [
          {
            "boot": true,
            "auto_delete": true,
            "initialize_params": {
              "disk_size_gb": 500
            }
          }
        ]
      }
    }

Sample YAML file that you can use with the Anyscale CLI/SDK. This sample modifies the disk for all nodes in the Anyscale cluster.

    cloud: CLOUD_NAME
    head_node:
      instance_type: INSTANCE_TYPE_HEAD
    worker_nodes:
      - instance_type: INSTANCE_TYPE_ACCELERATED
        min_nodes: MIN_NODES
        max_nodes: MAX_NODES
    advanced_instance_config:
      instanceProperties:
        disks:
          - boot: true
            auto_delete: true
            initialize_params:
              disk_size_gb: 500

Advanced features

Adjustable downscaling

The Anyscale platform automatically downscales worker nodes that have been idle for a given period. By default, the timeout period ranges from 30 seconds to 4 minutes and is dynamically adjusted for each node group based on the workload. For example, short, bursty workloads have shorter timeouts and more aggressive downscaling. Adjustable downscaling lets you set this timeout value at the cluster level based on your workload needs.

- Console

- CLI

To adjust the timeout value from the Anyscale console, use the Advanced features tab under the Advanced settings for the cluster. This example sets the timeout to 60 seconds for all nodes in the cluster.

    {
      "idle_termination_seconds": 60
    }

To adjust the timeout value from the Anyscale CLI, use the flags field. This example YAML sets the timeout value to 60 seconds for all nodes in the cluster.

    cloud: CLOUD_NAME
    head_node:
      instance_type: INSTANCE_TYPE_HEAD
    worker_nodes:
      - instance_type: INSTANCE_TYPE_WORKER
        min_nodes: MIN_NODES
        max_nodes: MAX_NODES
    flags:
      idle_termination_seconds: 60
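To make the effect of the timeout concrete, the following sketch shows how a fixed idle timeout selects nodes for termination. It is an illustration only and not Anyscale's autoscaler logic; the function `idle_nodes`, the node names, and the timestamps are all hypothetical.

```python
def idle_nodes(last_active: dict[str, float], now: float,
               idle_termination_seconds: float) -> list[str]:
    """Return the names of nodes that have been idle for at least the timeout.

    last_active maps a node name to the time (in seconds) it last ran work.
    """
    return sorted(
        name
        for name, timestamp in last_active.items()
        if now - timestamp >= idle_termination_seconds
    )


# Hypothetical cluster state at t = 100 seconds.
state = {"worker-a": 0.0, "worker-b": 50.0, "worker-c": 100.0}
print(idle_nodes(state, now=100.0, idle_termination_seconds=60))
```

With a 60-second timeout only `worker-a` qualifies; lowering the timeout to 30 seconds would also select `worker-b`, which is the trade-off between cost and responsiveness that this setting controls.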

Cross-zone scaling

Compute capacity is often difficult to find. With Anyscale, you can maximize your chances of provisioning your desired instance type by leveraging the cross-zone scaling feature. By default, all worker nodes are launched in the same availability zone. With cross-zone scaling enabled, Anyscale first attempts to launch worker nodes in existing zones, but if that fails, then tries the next-best zone (based on availability). This feature is recommended for workloads without heavy inter-node communication.

- Console

- CLI

To enable or disable this feature from the Anyscale console, use the "Enable cross-zone scaling" checkbox under the Advanced settings for the cluster.

To enable or disable this feature from the Anyscale CLI, use the enable_cross_zone_scaling field. This example YAML enables cross-zone scaling for all nodes in the cluster.

    cloud: CLOUD_NAME
    head_node:
      instance_type: INSTANCE_TYPE_HEAD
    worker_nodes:
      - instance_type: INSTANCE_TYPE_WORKER
        min_nodes: MIN_NODES
        max_nodes: MAX_NODES
    enable_cross_zone_scaling: true
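The fallback order described above (existing zones first, then the next-best zone) can be sketched as follows. This is a simplified illustration under stated assumptions, not Anyscale's implementation: `choose_zone`, the `try_launch` callback, and the zone names are hypothetical, and the fallback list is assumed to be already sorted by availability.

```python
from typing import Callable, Optional, Sequence


def choose_zone(existing_zones: Sequence[str],
                fallback_zones: Sequence[str],
                try_launch: Callable[[str], bool]) -> Optional[str]:
    """Return the first zone where a launch succeeds, or None if all fail.

    Zones already used by the cluster are tried before any fallback zone.
    """
    for zone in existing_zones:
        if try_launch(zone):
            return zone
    for zone in fallback_zones:
        if zone not in existing_zones and try_launch(zone):
            return zone
    return None


# Hypothetical capacity: the cluster's current zone has no capacity left.
capacity = {"us-west-2a": False, "us-west-2b": True, "us-west-2c": True}
print(choose_zone(["us-west-2a"], ["us-west-2b", "us-west-2c"],
                  lambda zone: capacity.get(zone, False)))
```

Passing an empty fallback list models the default behavior, where all worker nodes stay in the same availability zone.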

Resource limits

Cluster-wide resource limits allow you to define minimum and maximum values for any resource across all nodes in the cluster. There are two common use cases for this feature:

- Specifying the maximum number of GPUs to avoid unintentionally launching a large number of expensive instances.

- Specifying a custom resource for specific worker nodes and using that custom resource value to limit the number of nodes of those types.

- Console

- CLI

To set the maximum number of CPUs and GPUs in a cluster from the Anyscale console, use the "Maximum CPUs" and "Maximum GPUs" fields under the Advanced settings for the cluster.

To set other resource limits, use the Advanced features tab under the Advanced settings for the cluster. To add a custom resource to a node group, use the Ray config tab under the Advanced config section for that node group.

This example limits the minimum resources to 1 GPU and 1 CUSTOM_RESOURCE and limits the maximum resources to 5 CUSTOM_RESOURCE.

    {
      "min_resources": {
        "GPU": 1,
        "CUSTOM_RESOURCE": 1
      },
      "max_resources": {
        "CUSTOM_RESOURCE": 5
      }
    }

To set resource limits for a cluster from the Anyscale CLI, use the min_resources and max_resources fields. This example YAML adds a custom resource to a worker node, limits the minimum resources to 8 CPU and 1 CUSTOM_RESOURCE, and limits the maximum resources to 1 GPU and 5 CUSTOM_RESOURCE.

    cloud: CLOUD_NAME
    head_node:
      instance_type: INSTANCE_TYPE_HEAD
    worker_nodes:
      - instance_type: INSTANCE_TYPE_WORKER
        resources:
          CUSTOM_RESOURCE: 1
        min_nodes: MIN_NODES
        max_nodes: MAX_NODES
    min_resources:
      CPU: 8
      CUSTOM_RESOURCE: 1
    max_resources:
      GPU: 1
      CUSTOM_RESOURCE: 5
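Because the limits apply to totals across all nodes, it can be useful to check a planned set of nodes against them before launching. The sketch below sums per-node resources and reports any violated limit. It illustrates the semantics described above and is not an Anyscale API; `limit_violations` and the node shapes are hypothetical.

```python
from collections import Counter


def limit_violations(nodes: list[dict], min_resources: dict,
                     max_resources: dict) -> list[str]:
    """Compare cluster-wide resource totals against minimum and maximum limits."""
    totals = Counter()
    for node in nodes:
        totals.update(node)  # adds each node's resource amounts to the totals

    problems = []
    for name, minimum in min_resources.items():
        if totals.get(name, 0) < minimum:
            problems.append(f"{name}: total {totals.get(name, 0)} is below minimum {minimum}")
    for name, maximum in max_resources.items():
        if totals.get(name, 0) > maximum:
            problems.append(f"{name}: total {totals.get(name, 0)} exceeds maximum {maximum}")
    return problems


# Limits from the YAML example above; the node shape is a hypothetical 8-CPU, 1-GPU worker.
min_limits = {"CPU": 8, "CUSTOM_RESOURCE": 1}
max_limits = {"GPU": 1, "CUSTOM_RESOURCE": 5}
worker = {"CPU": 8, "GPU": 1, "CUSTOM_RESOURCE": 1}

print(limit_violations([worker], min_limits, max_limits))
print(limit_violations([worker, worker], min_limits, max_limits))
```

One worker satisfies every limit, while two workers exceed the GPU maximum, which is the case the first use case above is meant to prevent.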

Worker group ranking (Developer Preview)

Some workloads may require prioritizing specific worker groups over others. This could involve utilizing on-demand capacity reservations before scaling out to use spot instances, or influencing the utilization of a particular node type before another.

Anyscale's default ranking prioritizes CPU-only worker groups above GPU worker groups and spot above on-demand, and also takes the availability of the instance type into account. With the worker group ranking feature, you can instead specify a custom ranking order for the worker groups.

- Console

- CLI

To specify a custom worker group ranking from the Anyscale console, use the Advanced features tab under the Advanced settings for the cluster. This example specifies a ranking for 3 worker groups named worker-node-type-1, worker-node-type-2 and worker-node-type-3.

    {
      "instance_ranking_strategy": [
        {
          "ranker_type": "custom_group_order",
          "ranker_config": {
            "group_order": [
              "worker-node-type-1",
              "worker-node-type-2",
              "worker-node-type-3"
            ]
          }
        }
      ]
    }

To specify a custom worker group ranking from the Anyscale CLI, use the flags field. This example specifies a ranking for 2 worker groups named worker-node-type-1 and worker-node-type-2.

    cloud: CLOUD_NAME
    head_node:
      instance_type: INSTANCE_TYPE_HEAD
    worker_nodes:
      - name: worker-node-type-1
        instance_type: INSTANCE_TYPE_WORKER
        min_nodes: MIN_NODES
        max_nodes: MAX_NODES
      - name: worker-node-type-2
        instance_type: INSTANCE_TYPE_WORKER
        min_nodes: MIN_NODES
        max_nodes: MAX_NODES
    flags:
      instance_ranking_strategy:
        - ranker_type: custom_group_order
          ranker_config:
            group_order:
              - worker-node-type-1
              - worker-node-type-2
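The idea behind a custom group order can be sketched as a sort keyed on each group's position in `group_order`. This is only an illustration, not the Anyscale ranker: the function `rank_groups` is hypothetical, and placing unlisted groups last is an assumption, since the text above does not say how they are handled.

```python
def rank_groups(candidates: list[str], group_order: list[str]) -> list[str]:
    """Order candidate worker groups by their position in group_order.

    Groups missing from group_order are placed last (an assumption) and keep
    their original relative order because sorted() is stable.
    """
    position = {name: index for index, name in enumerate(group_order)}
    return sorted(candidates, key=lambda name: position.get(name, len(group_order)))


order = ["worker-node-type-1", "worker-node-type-2"]
print(rank_groups(["worker-node-type-2", "other-group", "worker-node-type-1"], order))
```

Listing a group backed by a capacity reservation ahead of a spot group expresses the first use case described above.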

Disabling NFS mounts

Some large-scale job and service workloads may need to disable NFS mounts to bypass cloud-provider restrictions on the number of concurrent connections. For example, Filestore on GCP allows 500 concurrent connections by default. Note that Anyscale raises an error if this flag is set on a cluster that is not running a job or service, because NFS is required for persistence in Anyscale Workspaces.

- Console

- CLI

To disable NFS mounts from the Anyscale console, use the Advanced features tab under the Advanced settings for the cluster.

    {
      "disable_nfs_mount": true
    }

To disable NFS mounts from the Anyscale CLI, use the flags field.

    cloud: CLOUD_NAME
    head_node:
      instance_type: INSTANCE_TYPE_HEAD
    worker_nodes:
      - instance_type: INSTANCE_TYPE_WORKER
        min_nodes: MIN_NODES
        max_nodes: MAX_NODES
    flags:
      disable_nfs_mount: true